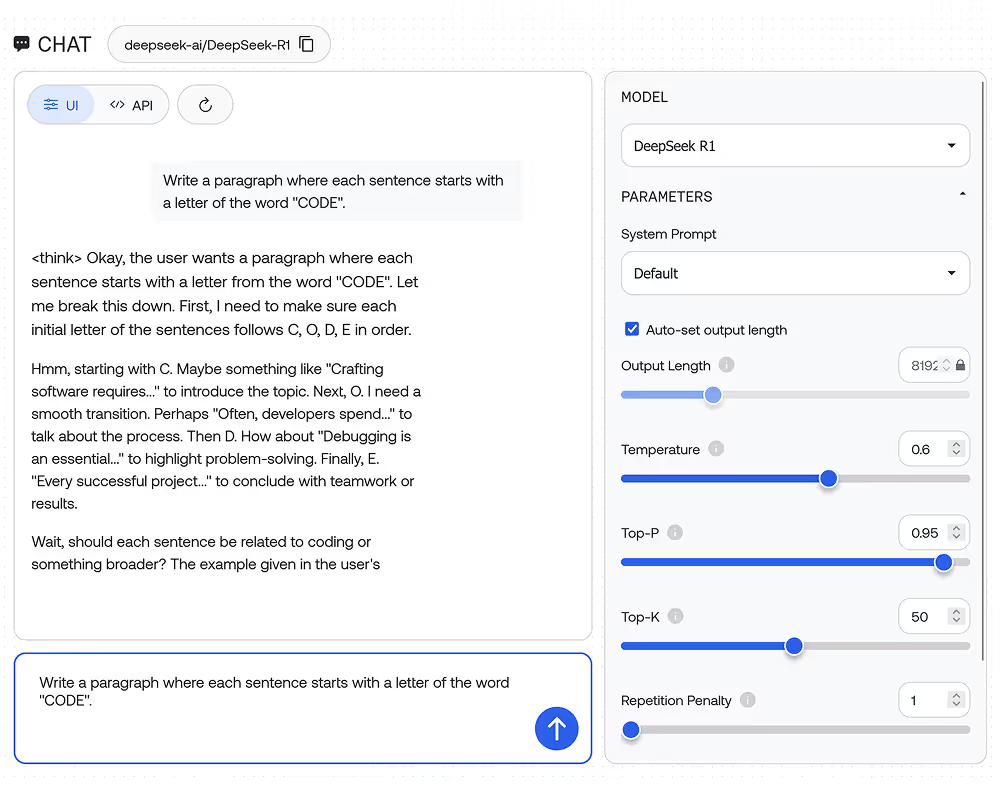

Run inference with an API call

Use our fast API to run inference on 200+ open-source models powered by the Together Inference Stack.

200+ generative AI models

Build with open-source and specialized multimodal models for chat, images, code, and more. Migrate from closed models with OpenAI-compatible APIs.
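As a rough illustration of what an OpenAI-compatible call can look like, the sketch below builds a chat completion request using only the Python standard library. The base URL, the `/chat/completions` path, and the response shape follow the OpenAI convention and are assumptions here, not details taken from this page; the helper names `build_chat_request` and `send` are hypothetical. Check the official API documentation for the actual endpoint and model identifiers.

```python
import json
import urllib.request

# Assumption: base URL of the OpenAI-compatible API. Confirm it in the official docs.
BASE_URL = "https://api.together.xyz/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # model identifier as listed by the provider
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice.

    Assumes the response follows the OpenAI chat completion schema.
    """
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

To try it, pass your own API key and a model identifier from the model catalog to `build_chat_request`, then hand the result to `send`. Because the request format matches the OpenAI convention, an existing OpenAI client can usually be pointed at the new base URL instead of writing the HTTP call by hand.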

Inference that is fast, simple, and scales as you grow.

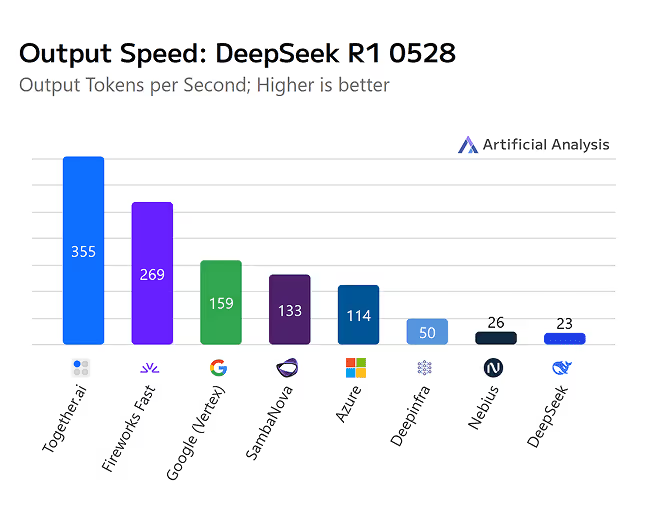

Fast

Run leading open-source models like DeepSeek and Llama on the fastest inference engine available, up to 4x faster than vLLM.

Outperforms Amazon Bedrock and Azure AI by over 2x.

Cost-efficient

Together Inference is 11x lower cost than GPT-4o when using Llama 3.3 70B and 9x lower cost than OpenAI o1 when using DeepSeek-R1. Our optimizations bring you the best performance at the lowest cost.

Scalable

We obsess over system optimization and scaling so you don’t have to. As your application grows, capacity is automatically added to meet your API request volume.

Run fast Serverless Endpoints for leading open-source models

Powered by the Together Inference Stack

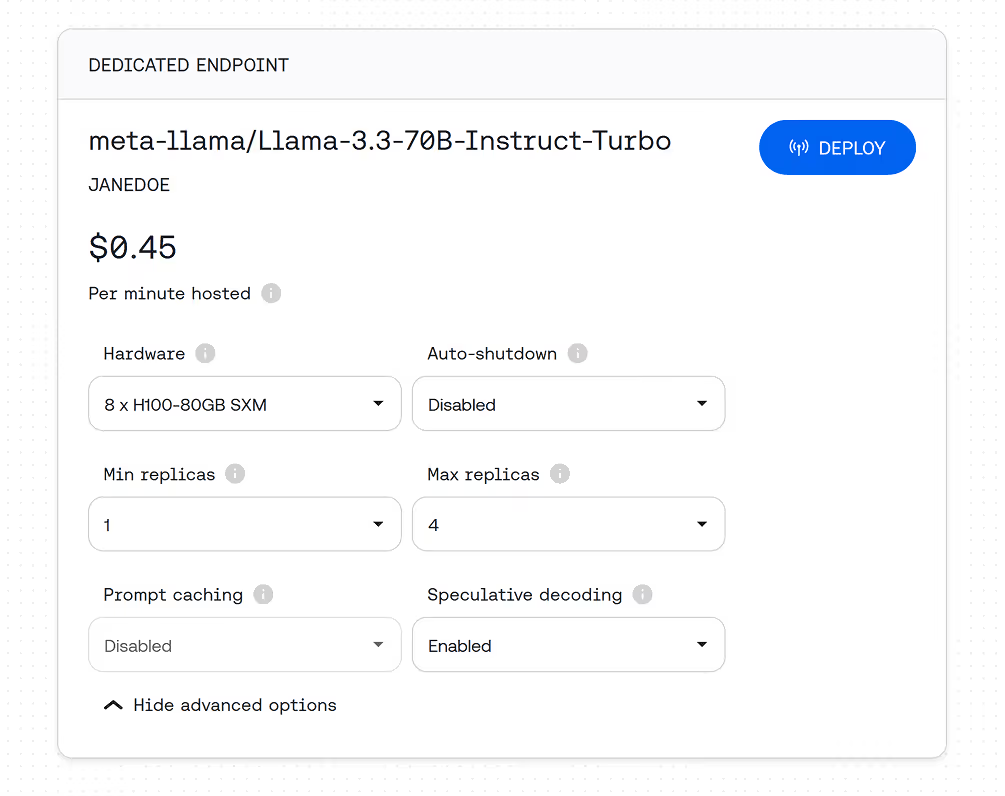

Built by AI researchers for AI innovators, the Together Inference Stack gives you the fastest NVIDIA GPUs running our proprietary Inference Engine, optimized by Together Kernel Collection and customized to your traffic.

Fast Inference Engine

Running on the latest NVIDIA GPUs with custom optimizations, our Inference Engine offers inference that's up to 4x faster than vLLM.

Together Kernel Collection

The Together Kernel Collection, from our Chief Scientist and FlashAttention creator Tri Dao, provides up to 10% faster training and 75% faster inference.

Customized to Your Traffic Profile

Together AI’s Research team will fine-tune and optimize your deployment using our proprietary optimizations such as custom speculators.

"Together AI offers optimized performance at scale, and at a lower cost than closed-source providers – all while maintaining strict privacy standards."

- Vineet Khosla, CTO, The Washington Post

Performance

You get more tokens per second, higher throughput, and lower time to first token. These efficiencies mean we can provide you compute at a lower cost.
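For readers who want to measure these numbers on their own workload, here is a minimal sketch of two of the metrics for a single streamed response. It uses one common definition (output tokens divided by total wall-clock time), which is an assumption and not necessarily how the figures on this page were measured. The function name `summarize_stream` is hypothetical, and aggregate throughput across concurrent requests is not covered.

```python
from typing import Dict, Sequence


def summarize_stream(request_time: float, token_times: Sequence[float]) -> Dict[str, float]:
    """Compute latency metrics for one streamed response.

    request_time: timestamp (seconds) when the request was sent.
    token_times:  timestamps (seconds) at which each output token arrived,
                  in arrival order.
    """
    if not token_times:
        raise ValueError("no tokens were received")

    # Time to first token: delay between sending the request and the first token.
    ttft = token_times[0] - request_time

    # Tokens per second: output tokens divided by total elapsed time.
    total = token_times[-1] - request_time
    tokens_per_s = len(token_times) / total if total > 0 else float("inf")

    return {"ttft_s": ttft, "tokens_per_s": tokens_per_s}
```

In practice the timestamps would come from something like `time.perf_counter()` recorded as each chunk of a streaming response arrives.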

Charts (Llama 3.3 70B, deployed on 2x H100): speed relative to vLLM; cost relative to GPT-4o.

Flexible deployment options

Together Cloud

- Get started quickly with fully managed serverless endpoints with pay-per-token pricing

- Dedicated GPU endpoints with autoscaling for consistent performance

Your Cloud

- Dedicated serverless deployments in your cloud provider

- VPC deployment available for additional security

- Use your existing cloud spend

Together GPU Clusters

- For large-scale inference workloads or foundation model training

- NVIDIA H100 and H200 clusters with InfiniBand and NVLink

- Available with Together Training and Inference Engines for up to 25% faster training and 75% faster inference than PyTorch

Customer Stories

See how we support leading teams around the world. Our customers are creating innovative generative AI applications, faster.
