Level up your AI enterprise

Leading enterprises use Together AI for their full generative AI lifecycle: training, fine-tuning, and inference.


Maximize your generative AI investments and take control over your models

- 01


Run production-grade inference

Run popular models like Llama or Qwen, or your own custom model, on our highly scalable Together Inference Engine. Deploy on our serverless or dedicated endpoints, inside your VPC or on Together GPU Clusters to match your workload needs.

- 02

Fine-tune and experiment easily

Easily fine-tune and deploy new models for testing. Manage and orchestrate all your models in a single place. Quickly iterate and test different configurations for optimal performance.

- 03

Optimize performance & cost

Achieve the lowest price and latency, with the best accuracy, for your use case. Automatically fine-tune models, and use adaptive speculators and model distillation to drive better performance and costs for your models.

“We’ve been thoroughly impressed with the Together Enterprise Platform. It has delivered a 2x reduction in latency (time to first token) and cut our costs by approximately a third. These improvements allow us to launch AI-powered features and deliver lightning-fast experiences faster than ever before.”

- Caiming Xiong, VP, Salesforce AI Research

Flexible deployment options

Together Cloud

- Get started quickly with fully managed serverless endpoints with pay-per-token pricing

- Dedicated GPU endpoints with autoscaling for consistent performance
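With pay-per-token pricing, a rough monthly estimate is simple arithmetic. The sketch below is illustrative only: the `estimate_cost_usd` helper and the per-million-token prices in the usage example are hypothetical placeholders, not published rates.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      price_in_per_million: float,
                      price_out_per_million: float) -> float:
    """Estimate spend when input and output tokens are billed per million tokens."""
    cost_in = input_tokens / 1_000_000 * price_in_per_million
    cost_out = output_tokens / 1_000_000 * price_out_per_million
    return cost_in + cost_out


if __name__ == "__main__":
    # Hypothetical workload and prices, chosen only to show the calculation.
    monthly = estimate_cost_usd(
        input_tokens=2_000_000,
        output_tokens=500_000,
        price_in_per_million=0.20,
        price_out_per_million=0.60,
    )
    print(f"Estimated monthly cost: ${monthly:.2f}")
```

Substitute the actual rates for the model you choose; input and output tokens are often priced differently, which is why the helper keeps them separate.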

Your Cloud

- Dedicated serverless deployments in your cloud provider

- VPC deployment available for additional security

- Use your existing cloud spend


Together GPU Clusters

- For large-scale inference workloads or foundation model training

- NVIDIA H100 and H200 clusters with InfiniBand and NVLink

- Available with Together Training and Inference Engines for up to 25% faster training and 75% faster inference than PyTorch

Enterprise-grade security and data privacy

We take security and compliance seriously, with strict data privacy controls to keep your information protected. Your data and models remain fully under your ownership, safeguarded by robust security measures.

Together Inference

Best combination of performance, accuracy & cost at production scale so you don't have to compromise.

[Benchmark figures: speed relative to vLLM; Llama-3 8B at full precision; cost relative to GPT-4o]

Choose from best-in-class open-source models like Llama 3.2 and Qwen2.5, or bring your own model. Our platform supports open-source, proprietary, and custom models for any use case: text, image, vision, and multimodal.

Get started with our serverless APIs. We optimize every model to run for the best performance and price.
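As a rough illustration of what a first serverless call might look like, the sketch below builds (but does not send) a single-turn chat request. It assumes an OpenAI-style chat completions endpoint. The URL, the model identifier, and the `build_chat_request` helper are illustrative assumptions and are not taken from this page, so check them against the official API reference before use.

```python
import json
import urllib.request

# Assumed values for illustration; confirm against the official API reference.
API_URL = "https://api.together.xyz/v1/chat/completions"
MODEL = "meta-llama/Llama-3.2-3B-Instruct-Turbo"


def build_chat_request(api_key: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (without sending) a POST request for a single-turn chat completion."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("YOUR_API_KEY", "Summarize our Q3 support tickets.")
    print(req.get_method(), req.full_url)
    # To send it, pass `req` to urllib.request.urlopen and JSON-decode the body.
```

Keeping request construction separate from sending makes the payload easy to inspect and unit-test before any tokens are billed.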

Spin up dedicated endpoints for any model with 99.9% SLAs

Configurable autoscaling: as your traffic grows, capacity is added automatically to meet your API request volume.
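To make the idea of autoscaling concrete, here is a minimal sketch of a load-based scaling rule: choose enough replicas to cover the current request rate, within configured bounds. This is a generic illustration, not a description of how the platform's autoscaler works. The `replicas_needed` function, its parameters, and the numbers in the usage example are all hypothetical.

```python
import math


def replicas_needed(requests_per_sec: float, capacity_per_replica: float,
                    min_replicas: int = 1, max_replicas: int = 8) -> int:
    """Return the replica count that covers the load, clamped to [min, max]."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    wanted = math.ceil(requests_per_sec / capacity_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Hypothetical traffic levels, assuming each replica handles 10 requests/s.
    for load in (3, 25, 120):
        count = replicas_needed(load, capacity_per_replica=10)
        print(f"{load} req/s -> {count} replicas")
```

The minimum bound keeps an endpoint warm during quiet periods, and the maximum bound caps spend during traffic spikes.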

Choose from 200+ models or bring your own

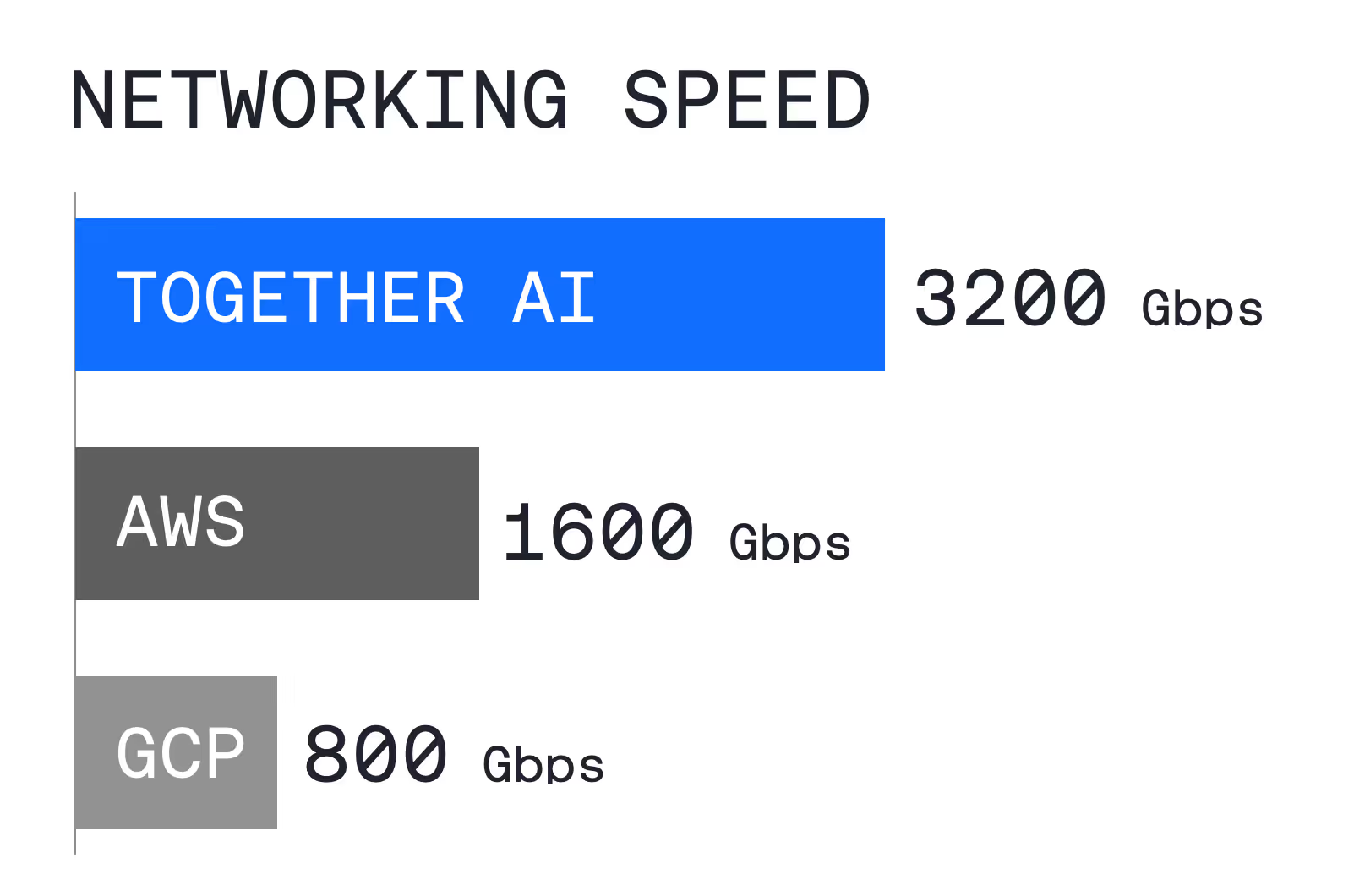

Highly reliable GPU clusters for large-scale inference and foundation model training

Top-spec NVIDIA H100 and H200 GPUs available. Our GPUs undergo a rigorous acceptance testing process to ensure fewer failures.

Deploy with the Together Training and Inference Engines for up to 25% faster training and 75% faster inference than PyTorch.

Our proprietary engines were built by the leading researchers behind innovations such as FlashAttention.

Dedicated support and reliability

Get expert support with a 99.9% SLA and a dedicated customer success representative for seamless deployment and optimization.
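For a sense of scale, a 99.9% availability target leaves room for roughly 43 minutes of downtime in a 30-day month. The sketch below shows the arithmetic. The `allowed_downtime_minutes` helper is hypothetical, and how availability is actually measured and credited depends on the terms of the agreement.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 30) -> float:
    """Return the downtime budget, in minutes, implied by an availability percentage."""
    if not 0 <= sla_percent <= 100:
        raise ValueError("sla_percent must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    monthly = allowed_downtime_minutes(99.9, period_days=30)
    yearly = allowed_downtime_minutes(99.9, period_days=365)
    print(f"30 days:  {monthly:.1f} minutes")
    print(f"365 days: {yearly / 60:.2f} hours")
```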

Hear from our customers

"Together AI offers optimized performance at scale, and at a lower cost than closed-source providers, all while maintaining strict privacy standards. As an AI-forward publication, we look forward to expanding our collaboration with Together AI for larger-scale in-house efforts."

– Vineet Khosla, CTO for The Washington Post

"Our endeavor is to deliver exceptional customer experience at all times. Together AI has been our long standing partner and with Together Inference Engine 2.0 and Together Turbo models, we have been able to provide high quality, fast, and accurate support that our customers demand at tremendous scale."

– Rinshul Chandra, COO, Food Delivery, Zoma

Contact our sales team to discuss your needs and options.
