Deploy models on custom hardware

Customize your own dedicated GPU instance to reliably deploy models with unmatched price-performance at scale.

Performance and reliability for production scale

Together Dedicated Endpoints allow you to customize your own dedicated GPU instance to reliably deploy models and achieve cost savings at scale.

Get full control of your deployment

Customize your single-tenant deployment powered by the latest NVIDIA GPU hardware and optimized by innovations like speculative decoding.

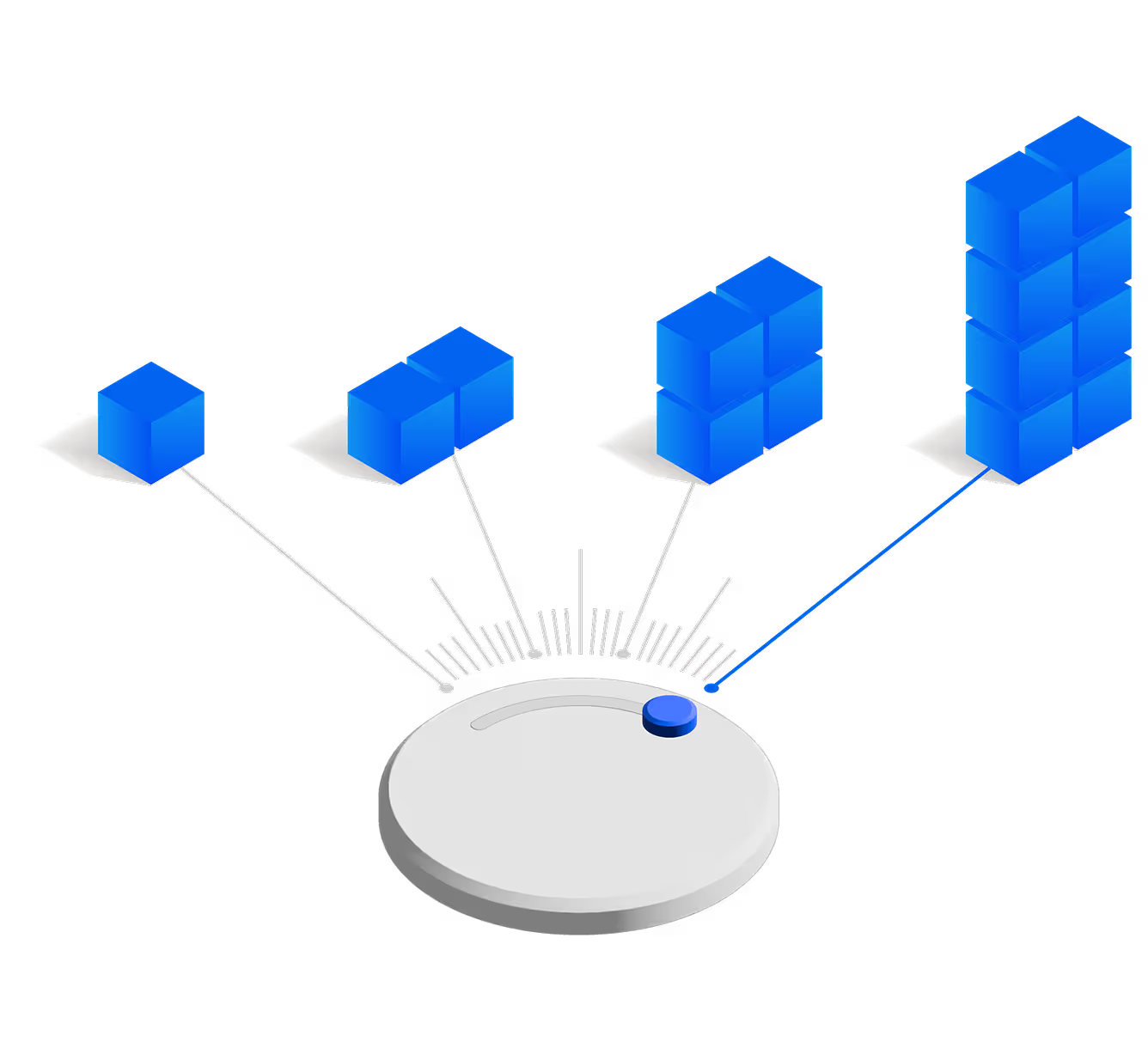

Handle traffic spikes seamlessly

Flexible vertical and horizontal scaling options let your deployment keep up with traffic demand, even during spikes.

Achieve cost savings at scale

Per-minute billing makes dedicated endpoints cost-effective at scale, generally for workloads above 130,000 tokens per minute.
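To see why volume matters under per-minute billing, here is a rough break-even sketch. It assumes the endpoint runs on a single GPU at the $3.36/hour starting price listed for the HGX H100-80GB SXM in the hardware specs, is busy for every billed minute, and sustains 130,000 tokens/minute. The pairing of that volume with one H100 is illustrative, and the serverless price passed in is a hypothetical placeholder, not a quoted Together AI rate.

```python
def dedicated_cost_per_million_tokens(
    hourly_rate_usd: float, gpu_count: int, tokens_per_minute: float
) -> float:
    """Effective USD per 1M tokens for a dedicated endpoint that is busy every billed minute."""
    if gpu_count < 1 or tokens_per_minute <= 0:
        raise ValueError("gpu_count must be >= 1 and tokens_per_minute > 0")
    cost_per_minute = hourly_rate_usd * gpu_count / 60.0
    return cost_per_minute / tokens_per_minute * 1_000_000


def break_even_tokens_per_minute(
    hourly_rate_usd: float, gpu_count: int, serverless_usd_per_million: float
) -> float:
    """Sustained volume above which the dedicated endpoint costs less than pay-per-token."""
    if gpu_count < 1 or serverless_usd_per_million <= 0:
        raise ValueError("gpu_count must be >= 1 and the serverless price > 0")
    cost_per_minute = hourly_rate_usd * gpu_count / 60.0
    return cost_per_minute / serverless_usd_per_million * 1_000_000


if __name__ == "__main__":
    # One H100-80GB at $3.36/hour serving 130,000 tokens/minute.
    print(f"${dedicated_cost_per_million_tokens(3.36, 1, 130_000):.2f} per 1M tokens")  # $0.43 per 1M tokens
    # Hypothetical serverless price of $0.50 per 1M tokens (placeholder, not a real quote).
    print(f"{break_even_tokens_per_minute(3.36, 1, 0.50):,.0f} tokens/minute")  # 112,000 tokens/minute
```

The break-even volume grows linearly with GPU count and hourly rate, and real throughput depends on the model, hardware, and enabled optimizations, so it is worth measuring for your own workload.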

"Together AI’s Dedicated Endpoints give us precise control over latency, throughput, and concurrency—allowing us to serve more than 10 million monthly active users with BLACKBOX AI autonomous coding agents. The flexibility of autoscaling, combined with exceptional engineering support, has been crucial in accelerating our growth."

- Robert Rizk, Co-Founder and CEO of BLACKBOX AI

Available now for the top open-source models

Chat

DeepSeek-R1-0528

Upgraded DeepSeek-R1 with better reasoning, function calling, and coding; it uses about 23K thinking tokens per question to score 87.5% on AIME.

Chat

Llama 3.3 70B

The Meta Llama 3.3 multilingual large language model (LLM) is a pretrained and instruction-tuned generative model in 70B (text in/text out). The instruction-tuned, text-only Llama 3.3 model is optimized for multilingual dialogue use cases and outperforms many available open-source and closed chat models on common industry benchmarks.

Chat

Gemma 3 27B

Lightweight model with vision-language input, multilingual support, visual reasoning, and top-tier performance per size.

Code

Devstral Small 2505

24B coding model by Mistral AI & All Hands AI built for advanced agentic code tasks, topping SWE-bench scores.

Code

Magistral Small 2506

24B-parameter open-source reasoning model from Mistral AI, fine-tuned and RL-trained for strong math, coding, and multilingual reasoning.

Chat

Qwen2.5 72B

Powerful decoder-only models available in 7B and 72B variants, developed by Alibaba Cloud's Qwen team for advanced language processing.

Chat

Cogito V1 Preview Llama 70B

Best-in-class open-source LLM trained with Iterated Distillation and Amplification (IDA) for alignment, reasoning, and self-reflective, agentic applications.

Chat

Llama 3.1 8B

The Meta Llama 3.1 collection of multilingual large language models (LLMs) comprises pretrained and instruction-tuned generative models in 8B, 70B, and 405B sizes that outperform many available open-source and closed chat models on common industry benchmarks.

Chat

Cogito V1 Preview Qwen 32B

Best-in-class open-source LLM trained with IDA for alignment, reasoning, and self-reflective, agentic applications.

Chat

Mixtral 8x7B Instruct v0.1

The Mixtral-8x7B large language model (LLM) is a pretrained generative sparse mixture-of-experts (SMoE) model.

Moderation

VirtueGuard Text Lite

AI guardrail model covering 12 risk categories with 8ms latency and an 89% F1 score, running 50x faster than AWS Bedrock and Azure guardrails while reducing false positives.

Chat

Llama 3.1 70B

The Meta Llama 3.1 collection of multilingual large language models (LLMs) comprises pretrained and instruction-tuned generative models in 8B, 70B, and 405B sizes that outperform many available open-source and closed chat models on common industry benchmarks.

Chat

NIM Llama 3.1 8B Instruct

NVIDIA NIM for GPU-accelerated Llama 3.1 8B Instruct inference through OpenAI-compatible APIs.

Chat

DeepSeek-R1-0528 Throughput

A throughput-optimized deployment of DeepSeek-R1, a state-of-the-art reasoning model trained with reinforcement learning. It delivers strong performance on math, code, and logic tasks, comparable to OpenAI-o1, and is especially good at tasks like code review, document analysis, planning, and information extraction.

Chat

MiniMax M1 40K

456B-parameter hybrid MoE reasoning model with a 40K thinking budget, lightning attention, and a 1M-token context window for efficient reasoning and problem-solving tasks.

Pick the deployment that fits your needs

Together AI offers the most comprehensive deployment options for inference, ensuring you get the right balance of flexibility, performance, and cost-efficiency.

Serverless Endpoints

The simplest way to run inference.

✔ API ready for 200+ models.

✔ Simplest setup.

✔ Highest flexibility.

✔ Pay-per-token pricing.

On-Demand Dedicated

Configurable dedicated GPU instances.

✔ Guaranteed performance (single-tenant).

✔ Support for custom models.

✔ Full control & customizability.

✔ Pay for GPU runtime.

Monthly Reserved

Reserved GPUs with discounts at scale.

✔ Reserved capacity for 1+ months.

✔ Fully custom setup.

✔ Secure enterprise deployments.

✔ Discounted upfront payment.

Configure and deploy in seconds with our API

Use our API and CLI to set up, deploy and manage your dedicated endpoints without worrying about the GPU infrastructure.

Access top open-source models

Use our CLI to quickly get a list of the available open-source models you can deploy on a dedicated endpoint.

Select from a wide range of the top-performing NVIDIA GPUs

Get a comprehensive list of all available GPU configurations for any given model. Select from leading NVIDIA GPUs including H200, H100, and many more.
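Which configurations are offered for a model depends largely on whether its weights fit in GPU memory. The sketch below is a hypothetical back-of-envelope estimate, not the logic Together AI uses to build its list: it multiplies parameter count by bytes per parameter, applies an assumed 20% headroom for the KV cache and activations (the `headroom` default is an illustrative assumption), and rounds the GPU count up to a power of two, as tensor-parallel deployments commonly do. VRAM sizes and starting prices come from the hardware specs on this page; `gpus_needed` and `candidate_configs` are hypothetical helpers.

```python
from typing import List, Optional, Tuple

# (GPU, VRAM in GB, starting USD/hour per GPU), taken from the hardware specs on this page.
GPUS: List[Tuple[str, int, float]] = [
    ("H200-141GB SXM", 141, 4.99),
    ("H100-80GB SXM", 80, 3.36),
    ("A100-80GB SXM", 80, 2.59),
    ("A100-80GB PCIe", 80, 2.40),
    ("A100-40GB SXM", 40, 2.40),
    ("L40-48GB PCIe", 48, 1.49),
]


def gpus_needed(params_billion: float, bytes_per_param: float, vram_gb: float,
                headroom: float = 1.2, max_gpus: int = 8) -> Optional[int]:
    """Smallest power-of-two GPU count whose combined VRAM fits weights plus headroom.

    headroom is an assumed multiplier for KV cache and activations, not a measured value.
    Returns None when more than max_gpus GPUs would be required.
    """
    # 1B parameters at 1 byte each occupy about 1 GB.
    required_gb = params_billion * bytes_per_param * headroom
    count = 1
    while count * vram_gb < required_gb:
        count *= 2
        if count > max_gpus:
            return None
    return count


def candidate_configs(params_billion: float, bytes_per_param: float) -> List[Tuple[str, int, float]]:
    """(GPU, count, starting USD/hour) for every GPU type that fits, cheapest first."""
    options = []
    for name, vram_gb, hourly in GPUS:
        count = gpus_needed(params_billion, bytes_per_param, vram_gb)
        if count is not None:
            options.append((name, count, count * hourly))
    return sorted(options, key=lambda option: option[2])


if __name__ == "__main__":
    # A 70B-parameter model served with 16-bit weights (2 bytes per parameter).
    for name, count, hourly in candidate_configs(70, 2):
        print(f"{count} x {name}: from ${hourly:.2f}/hour")
```

This only checks memory fit. Throughput, interconnect (PCIe versus NVLink), and latency targets also decide which configuration suits a given workload.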

Configure the endpoint for your needs

Customize your endpoint with a set of options that give you full control over your deployment.

Set a GPU count to ensure consistent capacity, set the number of replicas to enable automatic horizontal scaling, and enable or disable optimizations for your deployment.
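As a rough illustration of how GPU count and replicas combine, here is a hypothetical local model of an endpoint configuration. `EndpointConfig`, `replicas_for`, the min/max replica bounds, and the per-replica capacity figure are illustrative assumptions, not Together AI's API or its autoscaling algorithm. The sketch sizes replicas to demand within the allowed range and prices the result at the per-GPU hourly rate.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EndpointConfig:
    """Hypothetical dedicated-endpoint settings (illustrative, not Together AI's API)."""
    gpu_hourly_rate_usd: float  # per-GPU price, e.g. 3.36 for an H100-80GB
    gpus_per_replica: int       # GPU count: fixed capacity of each replica
    min_replicas: int           # lower bound for horizontal scaling
    max_replicas: int           # upper bound for horizontal scaling

    def __post_init__(self) -> None:
        if self.gpus_per_replica < 1 or self.min_replicas < 1:
            raise ValueError("GPU count and min_replicas must be at least 1")
        if self.max_replicas < self.min_replicas:
            raise ValueError("max_replicas must be >= min_replicas")

    def replicas_for(self, demand_tpm: float, replica_capacity_tpm: float) -> int:
        """Replicas needed for demand_tpm tokens/minute, clamped to the allowed range."""
        if replica_capacity_tpm <= 0:
            raise ValueError("replica_capacity_tpm must be positive")
        needed = math.ceil(demand_tpm / replica_capacity_tpm)
        return max(self.min_replicas, min(self.max_replicas, needed))

    def hourly_cost(self, replicas: int) -> float:
        """Total USD/hour: every replica bills all of its GPUs."""
        return self.gpu_hourly_rate_usd * self.gpus_per_replica * replicas


if __name__ == "__main__":
    config = EndpointConfig(gpu_hourly_rate_usd=3.36, gpus_per_replica=2,
                            min_replicas=1, max_replicas=4)
    capacity = 150_000  # assumed tokens/minute one replica sustains (illustrative)
    for demand in (50_000, 500_000, 1_000_000):
        replicas = config.replicas_for(demand, capacity)
        print(f"{demand:>9,} tok/min -> {replicas} replica(s), ${config.hourly_cost(replicas):.2f}/hour")
```

At 1,000,000 tokens/minute the clamp caps the deployment at four replicas, so that load would call for a higher replica ceiling or more GPUs per replica.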

Get guaranteed performance with our highly optimized hardware

- 01

Leverage cutting-edge GPUs

Configure your custom endpoint by picking from a wide selection of powerful NVIDIA GPU hardware (including H200 and H100) to match your needs.

- 02

Customize your deployment

Configure a deployment that fits your needs by setting the number of GPUs, defining replicas for horizontal scaling, and enabling or disabling performance optimizations.

- 03

Enable speculative decoding

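This technique speeds up text generation: a smaller, faster draft model proposes several tokens ahead, and the main model verifies them together in a single forward pass, accepting proposed tokens only when they are consistent with its own predictions. This typically increases throughput and reduces latency without degrading output quality.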
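Speculative decoding preserves the main model's output because a drafted token is kept only when it matches what that model would have produced. The sketch below shows the greedy (temperature 0) variant with toy integer-token "models"; `toy_target`, `toy_draft`, and the draft length `k=4` are hypothetical. It is not Together AI's implementation: production systems use a trained draft model, run verification as one batched GPU pass, and use a rejection-sampling rule for non-greedy sampling.

```python
from typing import Callable, List, Tuple

# A toy "model" maps a token context to its greedy next token.
NextTokenFn = Callable[[List[int]], int]


def greedy_decode(model: NextTokenFn, prompt: List[int], n_new: int) -> List[int]:
    """Baseline: one model call per generated token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(model(out))
    return out[len(prompt):]


def speculative_decode(target: NextTokenFn, draft: NextTokenFn, prompt: List[int],
                       n_new: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, number of target verification rounds)."""
    out = list(prompt)
    rounds = 0
    while len(out) - len(prompt) < n_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal, ctx = [], list(out)
        for _ in range(k):
            token = draft(ctx)
            proposal.append(token)
            ctx.append(token)
        # 2. The target model checks each proposed position. A real server scores all
        #    k positions in one batched forward pass; here that counts as one "round".
        rounds += 1
        accepted: List[int] = []
        for token in proposal:
            expected = target(out + accepted)
            accepted.append(expected)  # the target's own choice is always kept
            if token != expected:
                break  # first disagreement: discard the rest of the draft
        else:
            # Every draft token matched. A real server gets the next token from the
            # same verification pass; this toy calls the target once more.
            accepted.append(target(out + accepted))
        out.extend(accepted)
    return out[len(prompt):len(prompt) + n_new], rounds


# Toy models: the draft agrees with the target except when the context length is a multiple of 5.
def toy_target(ctx: List[int]) -> int:
    return (sum(ctx) * 31 + 7) % 50


def toy_draft(ctx: List[int]) -> int:
    guess = toy_target(ctx)
    return (guess + 1) % 50 if len(ctx) % 5 == 0 else guess


if __name__ == "__main__":
    prompt, n = [1, 2, 3], 20
    tokens, rounds = speculative_decode(toy_target, toy_draft, prompt, n)
    assert tokens == greedy_decode(toy_target, prompt, n)  # identical output
    print(f"{n} tokens in {rounds} target rounds instead of {n}")
```

The speed-up comes from the main model emitting several tokens per verification round whenever the draft guesses well; when the draft is wrong, the round still yields at least one correct token.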

Hardware specs

- 01

HGX H200-141GB SXM

- NVIDIA HGX H200 architecture

- 141GB of HBM3e VRAM

- GPU link: SXM5 NVLink

- Starting at $4.99/hour

- 02

HGX H100-80GB SXM

- NVIDIA HGX H100 architecture

- 80GB of VRAM

- GPU link: SXM5 NVLink

- Starting at $3.36/hour

- 03

A100-80GB SXM

- NVIDIA A100 architecture

- 80GB of VRAM

- GPU link: SXM4 NVLink

- Starting at $2.59/hour

- 04

A100-80GB PCIe

- NVIDIA A100 architecture

- 80GB of VRAM

- GPU link: PCIe

- Starting at $2.40/hour

- 05

A100-40GB SXM

- NVIDIA A100 architecture

- 40GB of VRAM

- GPU link: SXM4 NVLink

- Starting at $2.40/hour

- 06

L40-48GB PCIe

- NVIDIA L40 architecture

- 48GB of VRAM

- GPU link: PCIe

- Starting at $1.49/hour