An end-to-end platform for your AI

No matter your starting point, we offer high-performance, scalable, developer-friendly tools to power your AI projects.

What we offer

Together AI offers cutting-edge products to power AI for your application.

We have the fastest performance, effortless horizontal scalability, easy-to-use developer tools, and an expert team that’s excited to work closely with you.

We’ll make quick work of solving problems together and deploy at the scale of your enterprise.

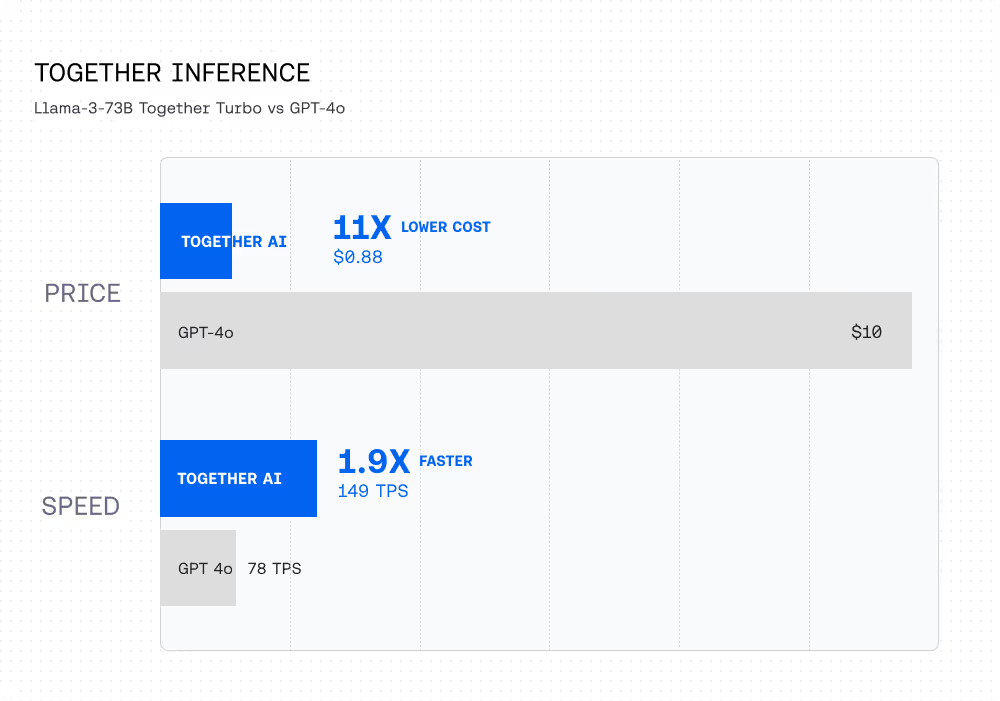

Unmatched performance

Our research and innovations bring next-level efficiencies in training and inference that can scale with your needs. Together Inference Engine is the fastest inference stack available.

Built to scale with you

We’ve built a horizontally scalable platform that is optimized to deliver the highest performance while scaling to meet your traffic.

Designed for rapid integration

When you’re ready to bring your model into your apps, integration is quick. With our easy-to-use API, your fine-tuned model can be integrated into business processes in a matter of days.

World-class support

We understand what it takes to train AI models to meet business goals. Our team can help you prepare your datasets, optimize them for accuracy, train your own private AI model, and deploy it in a scalable way, all to drive measurable results for your business.

Collaborate

Share fine-tuned models across your team, collaborate on testing, analyze usage from team members, and set up API keys for each phase of your application development.

Benchmark highlights (figures not captured here): speed relative to vLLM; Llama-3 70B relative to GPT-4o.

We have clusters available for you

Customer Stories

Together is the partner of choice for the world's most innovative AI developers.

Latitude.io is building a new era of video games in which the experience is dynamic and the player is not limited to what the developer imagined in advance. By leveraging the scalable and fast Together Inference service, Latitude.io addressed challenges in hosting large models, optimizing GPU deployments, and managing AI development costs.

- 37x reduction in classifier costs
- 2x size of free model
- ⅓ cost per token

Problem

Latitude.io faced challenges hosting LLMs at scale due to high infrastructure costs, complex GPU deployment management, and the need for scalable AI solutions. Because 95% of gameplay is driven by AI models, these hurdles limited their ability to deliver gaming experiences that matched their ambitions.

Solution

Together Inference allowed Latitude.io to keep latency low while increasing total daily tokens by 8x. With immediate access to the latest models, such as Meta Llama 3 and Mixtral, at low cost, Latitude.io was able to improve model quality and achieve longer context lengths. They also spent 80% less time managing GPU deployments, leading to significant cost savings while being assured of their cluster’s health.

Result

Latitude.io tripled their average input tokens per request, resulting in improved player value. In addition, their average requests per user per day have doubled, a sign that players recognize these improvements. While using Together AI, Latitude.io has increased user value and engagement and reinforced their core mission: giving back to the player.

"We have tripled our average input tokens per request which directly translates into increased player value, since more context achieves more coherence in AI responses. Our average requests per user per day have also doubled"


Cartesia is on a mission to build real-time intelligence for every user starting with their pioneering work on state space models (SSMs). They needed a low-latency inference solution and also wanted the flexibility to optimize for latency, throughput, and cost.

- 135 ms model latency
- 2x cost reduction
- <2 weeks to onboard a new custom model

Problem

Cartesia wanted a partner with a deep understanding of the inference stack for new model architectures. While latency was important for creating a seamless user experience, they also wanted the flexibility to balance latency, throughput, and cost in their inference deployment.

Solution

The ability to use Together AI with their own custom model enabled Cartesia to serve their state-of-the-art state space model, Sonic, in less than 2 weeks. With Together AI, Cartesia was able to optimize for real-time inference with industry-leading latency of <200 ms and 2x faster performance while maintaining the highest accuracy, all at half the cost of other providers.

Result

Cartesia was able to achieve the industry’s fastest text-to-voice performance with <200 ms end-to-end latency and 135 ms model latency to provide real-time inference to their users. With their cutting-edge technology and the fast Together AI service, they achieved lower cost and real-time text-to-voice generations, passing on these benefits to their end users.

"The expertise Together AI has in optimizing model serving at scale helped us bring our model to production in record time. The ability to use Together AI with custom models is a huge unlock for companies developing their own models."

Vals.ai is building a third-party review system to evaluate AI model performance in different industries such as accounting, law, and finance. By choosing Together AI to run their eval suite they have been able to achieve high throughput and efficiency for millions of API calls, enabling them to test new models and add them to their leaderboard on the same day they're released.

- <1 minute to integrate new models
- 0 rate limits hit for evals
- 20M API calls

Problem

Vals.ai needed an AI platform to run their eval suite on a variety of industries and across multiple benchmarks. They didn’t want to provision compute to host each new model themselves for testing. Instead, they wanted to use a provider that had high throughput, was reliable, and hosted several models as soon as they were available to ensure their benchmarks are kept up to date.

Solution

Since Vals.ai made Together AI their default provider for all their open-source model evaluations, they have been able to run many evals across multiple industries efficiently and affordably. Additionally, because Together AI is so agile in incorporating new OSS models, Vals.ai has been able to test models like Llama-3 on the same day they are released.

Result

Vals.ai has been able to run ~320k API calls and 200M tokens in a single day on Together AI while keeping their costs low and steady. Thanks to unprecedented low latency, they have also been able to run evaluations very efficiently, which has become one of their company’s biggest value adds.

“Our ability to rapidly test new models has been significantly augmented by the Together Platform. I can integrate and evaluate new models in just a few lines of code.”

Pika Labs, a video generation company founded by two Stanford PhD students, built its text-to-video model on Together GPU Clusters. As they got traction, Pika built new iterations of the model from scratch with Together GPU Clusters, and they scaled their inference volume as they grew to millions of videos generated per month.

- $1.1 million saved over 5 months
- 4 hours to training start
- 392,300 Discord users

Problem

Pika needed efficient compute capacity that scaled from prototype to production, and fast, efficient training performance was a must. They needed to move quickly: they didn’t have time to worry about setting up their own training infrastructure, and they needed a partner who could scale with their difficult-to-forecast traffic.

Solution

Pika used the Together Inference API to prototype rapidly with its easy-to-use open-source model library. Once the team decided to build their own models from the ground up, they opted for the unparalleled compute power of Together GPU Clusters. And once they launched the product and saw user traction grow exponentially, Pika scaled inference seamlessly.

Results

Pika grew to millions of videos generated per month with the top users spending ~10 hours per day on the platform — all within 6 months of being founded.

“Together GPU Clusters provided a combination of amazing training performance, expert support, and the ability to scale to meet our rapid growth to help us serve our growing community of AI creators.”

Upstage is a leading LLM company specializing in customized, domain-specific models, and the builder of top-ranked models like Solar. With Together Inference, they were able to make their Solar model available to a wide audience including Together API customers, Poe.com users, and their own customers.

- 2.8 million tokens per hour at peak
- 45 tokens per second for SOLAR v0 (70B) on Together AI

Problem

Upstage needed to host Solar, their most popular LLM, so that it could be used by the widest possible audience. When the model charted on the Hugging Face Open LLM Leaderboard, they also needed a place that could scale to handle high traffic while maintaining fast performance and cost efficiency.

Solution

Upstage chose Together Inference serverless endpoints to host their model because of the user-friendly interface of the API, its competitive pricing, and Together AI’s expert support, which made bring-up very easy.

Result

The Solar model was deployed on Together Inference and published on Poe.com. Together Inference easily scaled to serve over 2.8 million peak tokens per hour with exceptional performance of over 45 tokens per second. The Upstage team expanded their partnership and is integrating Together AI into their own service.

"We chose Together AI for their competitive pricing, user-friendly interface, and quick service. Truly, it offers an exceptional service experience. I was particularly impressed when their CEO, Vipul, personally jumped in to help with technical questions."

Wordware, founded by Cambridge University ML experts Robert Chandler and Filip Kozera, enables seamless collaboration between domain experts and engineers, emphasizing a 'prompt first' approach to building LLM applications. This unique method helps create diverse AI-powered experiences, ranging from simple workflows to intricate agents.

- 4 models integrated into Wordware's platform
- 16x cost reduction for AI-powered NPCs
- 3-4 hours to integrate multiple models

Wordware's mission is to enhance the machine learning workflow by removing the dependency on extensive 'ground truth' datasets. Their platform empowers domain experts to quickly refine prompts, improving collaboration and speeding up iterations. Wordware wanted to focus on building the best collaborative web-based IDE for language model programming with seamless model selection and not on the hassle of managing expensive infrastructure.

Wordware adopted Together's infrastructure for its versatility and user-friendly interface. The ability to rapidly prototype and scale using Together's Inference API and the powerful compute capabilities of the service was integral to their progress. The platform's low latency, minimal cold start times, and cost-effectiveness allowed Wordware to experiment with various models, enabling their customers to transition from GPT-4 to Mistral, leading to significant cost reductions, enhanced reliability and reduced latency.

Wordware's innovative approach has led to groundbreaking applications. One notable customer example is the development of AI-powered NPC interactions, in which the cost of operation was reduced by 16x after transitioning to Wordware. This efficiency comes from Wordware's token-based pricing and the ability to integrate multiple models, such as Mistral and OpenChat, seamlessly. Together these offer a balance of speed, flexibility, and cost-effectiveness that Wordware attributes to Together’s API.

"I love the flexibility Together AI provides, from serverless inference endpoints to easy fine-tuning and hosted deployments. We like working with a company who knows what they’re doing. With Together AI, downtime is low and throughput is amazing. That matters so much for us and our end-customers.”

Nexusflow, a leader in generative AI solutions for cybersecurity, relies on Together GPU Clusters to build robust cybersecurity models as they democratize cyber intelligence with AI.

- 40% cost savings per month
- <90 minutes onboarding time
- Zero downtime

Problem

To enhance the capabilities of existing base models with public data, Nexusflow required a cost-effective, reliable, and scalable compute partner. Traditional cloud providers were not able to simultaneously offer the cost-efficiency and the level of guaranteed availability that Nexusflow needed to scale their specialized workloads.

Solution

The team at Nexusflow opted for Together GPU Clusters, seeing it as the perfect "trifecta" in terms of contract length, pricing, and compute availability. They utilized GPUs suitable for their specific workload requirements, and benefited from the unparalleled support that Together’s expert team offers.

Results

Nexusflow completed the onboarding process in <90 minutes and was able to run workloads. Initial hiccups were resolved by Together's support team, ensuring a smooth experience. Nexusflow managed to cut their R&D cloud compute costs by 40%, while experiencing faster response times and lower latency in technical support than other cloud providers.

“In an industry where time and specialized capabilities can mean the difference between vulnerability and security, Together GPU Clusters has helped us scale compute resources quickly in a cost-effective way. Their high-performance infra and top-notch support lets us focus on building state-of-the-art generative AI solutions for cybersecurity.”

Arcee AI is a fast-growing startup focused on training specialized small language models (SLMs) tailored for specific tasks. Built on top of these models are Arcee Conductor and Arcee Orchestra, two products designed to simplify enterprise AI.

- 95% reduction in latency
- 41+ QPS at 32 concurrent requests

Problem

Arcee AI's EKS setup presented significant challenges: heavy demands on engineering resources, a need for specialized Kubernetes expertise, difficult GPU autoscaling, and multi-year commitments.

Solution

Arcee AI made a strategic decision to migrate its inference workloads to Together Dedicated Endpoints, which provided a truly managed GPU deployment—eliminating the need for in-house infrastructure work while delivering greater flexibility and a more price-competitive solution.

Results

Besides a major simplification of its infrastructure, Arcee AI experienced up to 95% reduction in latency, and exceeded throughput requirements with 41+ queries per second at 32 concurrent requests. This also opened the door for a deeper partnership between Arcee AI and Together AI.

"The migration was a very, very simple process. We put our models in a private Hugging Face repository, the Together AI team pulled them down and handed us the API, and we just plugged that into our app. That’s what we wanted–a fully managed experience. Everything has been great: full availability, no downtime."

Why open-source

Open-source models are the best choice for your company. They are faster, more customizable, and more private.

PERFORMANCE

These models were developed by research communities at leading institutions across the globe, including Google, Meta, OpenAI, and Stanford. With these models, you’ll get high accuracy, fast performance, and the ability to fine-tune the model to your specific needs.

PRIVACY

When you take a cutting-edge open-source model from Together AI and train it with your own private data, you’ll create a fine-tuned model that is completely yours – a private, proprietary tool that your company owns. Together AI enables you to do this in a fully private manner on Together Cloud, or in your existing Virtual Private Cloud. This means none of your private data is exposed to the world or used to improve someone else’s model.

TRANSPARENCY

Transparency puts you and your developers in the driver’s seat. It enables you to show your model review board, security team, and executives everything they need to green-light deploying generative AI in your application. At Together AI, we care about transparency so that we can give you more control. Let us help you understand the powerful tools at your fingertips.

CONTROL

You can fully fine-tune any open-source model. You can adjust every layer in the model. You don’t have to update the model on someone else’s schedule. You control what and when you deploy. Your developers will thank you.

Industries & use cases

Speed up your business processes, organize millions of documents, forecast demand for products, develop a conversational chatbot for your sales team — and so much more.

Harness the power of AI applications that are customized to you.

Defect detection

Boost quality control in a production process: automate visual inspection by identifying missing components using computer vision.

Text and data extraction

Extract and collate critical information from millions of documents at high speed.

Sentiment analysis

Understand the sentiment of words, sentences, paragraphs, or documents. Tune to your subject matter and language style for a high degree of precision.

News analysis

Pull names, events, and more from news so you can drive insights and make decisions.

Machine condition detection

Assess the condition of your machines through sensor data.

Image and video analysis

Automate editing workflows, catalog your assets and extract meaning from your images and videos.

Forecast business metrics

Create prediction models to forecast your business needs using your data.

Interaction analytics

Remove friction and improve customer journeys with deeper understanding of interactions across channels.

Script-writing

Generate creative starting points for books, movies, or other media. Leverage an AI co-pilot to help with editing scripts and creating a consistent tone.

Text to speech

Generate high-quality, natural speech from any text.

Document intelligence

Identify, extract and organize custom data from complex documents to reduce manual operations and improve workflows. Extract clauses, dates, parties, and other custom entities from documents with ease.

Text and speech translation

Automatically translate text or speech between over 100 languages.

Insights and analysis

Extract understanding and insights from unstructured text, and output in a structured form for use in a variety of formats (bullets, tables, sentences, or JSON).

Product summarization

Automate product titles and descriptions at scale, customizing to different regions or audiences to maximize engagement and SEO.

Image, video, and audio generation

Generate high-quality images, video, and audio from text prompts.

Personalization

Enhance the user experience by customizing content to each individual user.

Data audit

Detect and identify root causes of unexpected changes in metrics such as revenue and retention.

Code generation and understanding

Understand code in dozens of languages, summarize check-ins, identify bugs or issues, and automate code review processes.

Named entity recognition

Identify and extract known entities from bodies of text efficiently and accurately.

Customized document classification

Improve document classification by using features unique to your data.

Chatbots & virtual agents

Communicate with your end users 24/7 in natural language. Add an intelligent conversational layer to any application: customer support, sales, internal devops, legal assistant, coding co-pilot, social chat, and more. Easily extend to email, chat, and voice applications.

Summarization

Efficiently summarize a few paragraphs or whole documents.

Automatic speech recognition

Add a voice interface to any product or feature so your users can interact with your application more efficiently or in new modalities, such as hands-free while driving.