Deploy NVIDIA GPU clusters at scale

Forge the AI frontier with large-scale NVIDIA Blackwell GPU clusters, turbocharged by Together AI research.

AI Acceleration Cloud for training and inference

Built by AI researchers for AI innovators, Together GPU Clusters are powered by NVIDIA GB200, B200, H200, and H100 GPUs, along with the Together Kernel Collection — delivering up to 24% faster training operations.

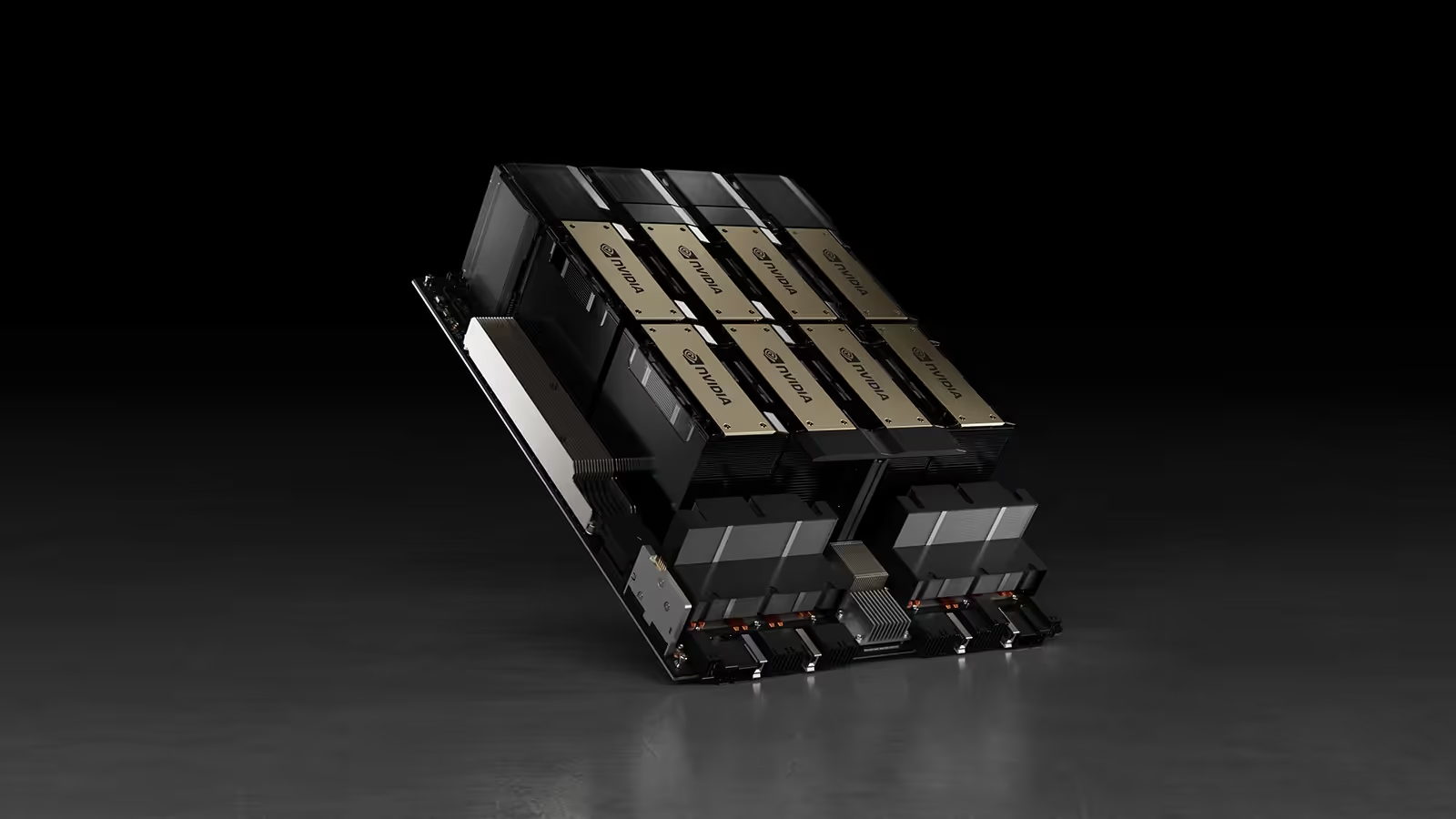

Top-Tier NVIDIA Hardware

16 → 100K+ NVIDIA GPUs, like GB200, B200, H200, and H100, interconnected with InfiniBand and NVLink for peak AI training performance.

Accelerated Software

The Together Kernel Collection, from our Chief Scientist and FlashAttention creator Tri Dao, provides up to 10% faster training and 75% faster inference.

Expert AI Advisory Services

Together AI’s expert team offers consulting for custom model development and scalable training best practices.

"Together GPU Clusters provided a combination of amazing training performance, expert support, and the ability to scale to meet our rapid growth to help us serve our growing community of AI creators."

- Demi Guo, CEO

Large-scale NVIDIA Blackwell clusters, custom built for you

As an NVIDIA Cloud Partner, we have massive clusters ready for you right now, and can also work with you to build GPU Clusters specific to your project needs.

NVIDIA GB200 NVL72: A rack-scale, liquid-cooled supercomputer that enables 72 NVIDIA Blackwell GPUs to act as one massive GPU, delivering 1.4 exaFLOPs of AI performance and up to 30TB of fast memory.

NVIDIA Blackwell GPU: delivering up to 15X more real-time inference and 3X faster training to accelerate trillion-parameter language models compared to the NVIDIA Hopper architecture generation.

NVIDIA HGX H200: 1.1TB of HBM3e across 8 Hopper GPUs with 7.2TB/s of total aggregate bandwidth, nearly doubling the memory capacity and offering 1.4 times more memory bandwidth than HGX H100.

NVIDIA HGX H100: delivering exceptional performance, scalability, and security for every workload.

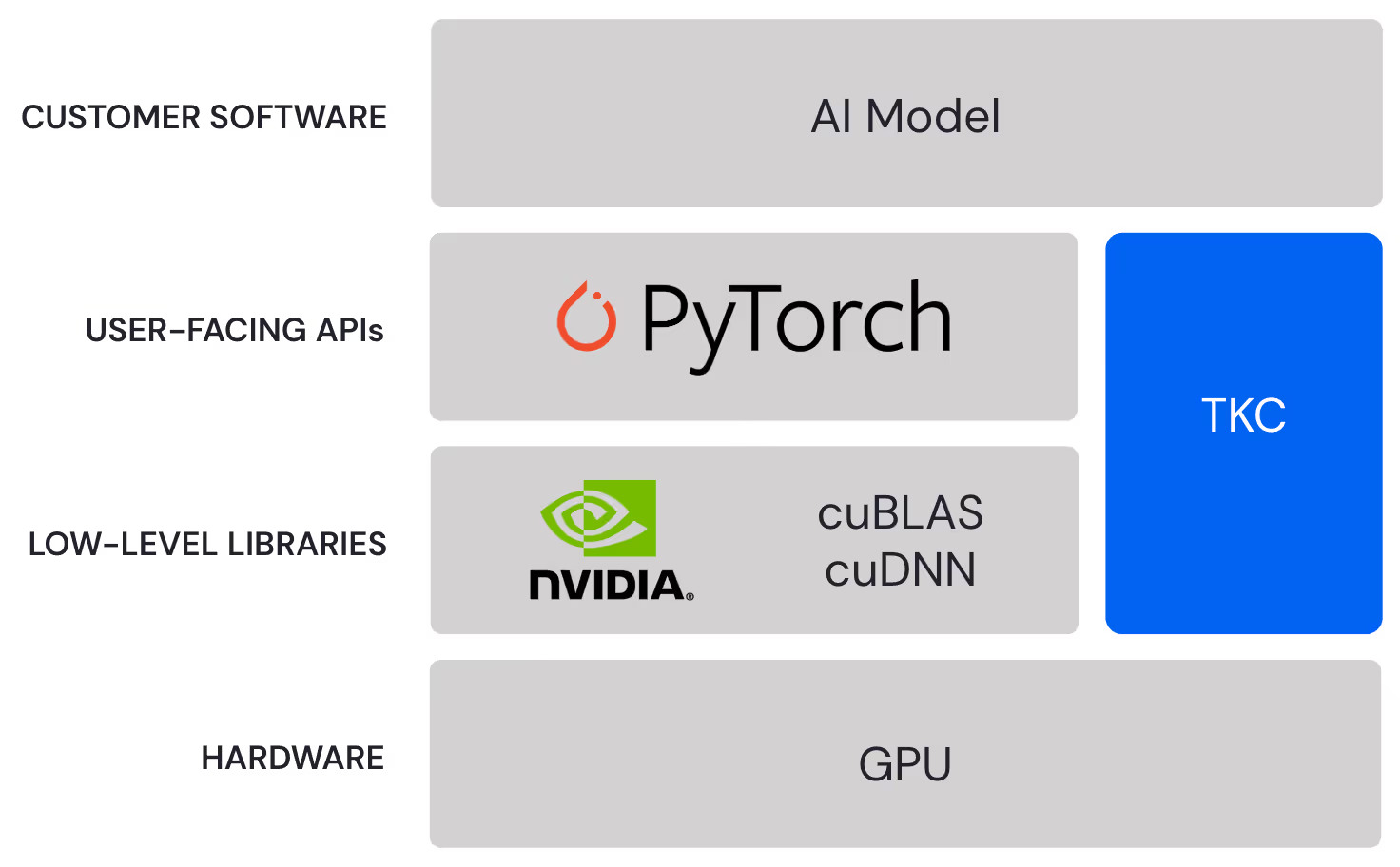

Together Kernel Collection (TKC)

10% Faster Training: TKC provides 10% faster training times across common AI models, with optimized kernels for multi-layer perceptrons (MLPs) using SwiGLU activations.

75% Faster Inference: TKC offers an astounding 75% faster inference with FP8 kernels optimized for small matrices, outperforming standard PyTorch implementations.

Built for PyTorch: designed to integrate seamlessly with PyTorch, TKC provides significant speedups compared to standard libraries like cuBLAS and cuDNN.

Train Faster, Spend Less: By enhancing throughput and efficiency, TKC allows customers to complete training faster and process more data within the same GPU budget.
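For readers unfamiliar with the SwiGLU MLP mentioned above, here is a minimal pure-Python reference of the math such a kernel computes. It is only a sketch for illustration, not TKC code: the function names (`silu`, `matvec`, `swiglu_mlp`) and the toy weights are hypothetical, and a real kernel would fuse these steps on the GPU.

```python
import math

def silu(x: float) -> float:
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))

def matvec(weight, vec):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weight]

def swiglu_mlp(x, w_gate, w_up, w_down):
    """Reference SwiGLU MLP: w_down @ (silu(w_gate @ x) * (w_up @ x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]   # gated branch
    up = matvec(w_up, x)                          # linear branch
    hidden = [g * u for g, u in zip(gate, up)]    # elementwise product
    return matvec(w_down, hidden)                 # project back to model width

# Toy example: model width 2, hidden width 3 (all values are illustrative).
x = [1.0, -2.0]
w_gate = [[0.5, 0.0], [0.0, 0.5], [1.0, 1.0]]
w_up = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
w_down = [[1.0, 1.0, 1.0], [0.5, -0.5, 0.0]]
y = swiglu_mlp(x, w_gate, w_up, w_down)
print(y)
```

The three matrix products and the elementwise gate are what an optimized kernel would typically fuse to cut memory traffic.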

“Delivering competitive pricing, strong reliability and a properly set up cluster is the bulk of the value differentiation for most AI clouds. The only differentiated value we have seen outside this set is from a Neocloud called Together AI where the inventor of FlashAttention, Tri Dao, works. We don't believe the value created by Together can be replicated elsewhere without cloning Tri.”

- Dylan Patel, Founder

Expert AI Advisory for Custom Model Training

We combine powerful infrastructure with expert guidance to help you build and deploy state-of-the-art custom AI models, tailored to your unique needs.

Custom Data Design: Leverage advanced tools like DSIR and DoReMi to select and optimize high-quality data slices, incorporating insights from datasets like RedPajama-v2.

Optimized Training: Collaborate with our experts to design architectures and training recipes for specialized use cases like instruction-tuning or conversational AI.

Accelerated Training and Fine-Tuning: Achieve up to 9x faster training and 75% cost savings with our optimized training stack, including FlashAttention-3.

Comprehensive Model Evaluation: We help you benchmark your model on public datasets or custom metrics to ensure exceptional performance and quality.

"At Krea, we're building a next-generation creative suite that brings AI-powered visual creation to everyone. Together AI provides the performance and reliability we need for real-time, high-quality image and video generation at scale. We value that Together AI is much more than an infrastructure provider – they’re a true innovation partner, enabling us to push creative boundaries without compromise."

- Victor Perez, Co-Founder, Krea

Performance metrics

Training horsepower

Relative to AWS

Training speed

Built for Frontier AI

Massive-scale interconnected compute

Together GPU Clusters are designed to scale from under 100 GPUs to frontier-class clusters with 1K → 100K+ GPUs. Interconnected via NVLink and InfiniBand, our clusters of GPUs work together as one.

AI-native storage solutions

Together GPU Clusters integrate AI-native storage systems like VAST Data and WEKA alongside NVMe SSDs to ensure rapid read/write speeds. These solutions reduce latency for large datasets, improving training and inference efficiency.

Advanced orchestration, right out of the box

Together GPU Clusters use Slurm and Kubernetes for efficient workload orchestration. Slurm can handle job scheduling for distributed training, while Kubernetes manages containerized inference, ensuring that GPU resources are optimally utilized.
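As a rough illustration of Slurm-scheduled distributed training, the sketch below renders a minimal batch script from Python. The `#SBATCH` directives are standard Slurm options, but the helper `render_sbatch`, the job name, and the `train.py` entry point are hypothetical; partition names, container setup, and launcher details depend on the actual cluster.

```python
def render_sbatch(job_name: str, nodes: int, gpus_per_node: int, command: str) -> str:
    """Render a minimal Slurm batch script that launches one task per GPU."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={gpus_per_node}",  # one process per GPU
        f"#SBATCH --gpus-per-node={gpus_per_node}",
        "",
        f"srun {command}",  # srun starts the command once per task
    ]
    return "\n".join(lines) + "\n"

# Hypothetical 4-node job on 8-GPU nodes; train.py is a placeholder script name.
script = render_sbatch("pretrain-demo", nodes=4, gpus_per_node=8, command="python train.py")
print(script)
```

Saving the output to a file and submitting it with `sbatch` would request 32 GPUs across four nodes, assuming the cluster exposes GPUs to Slurm in the usual way.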

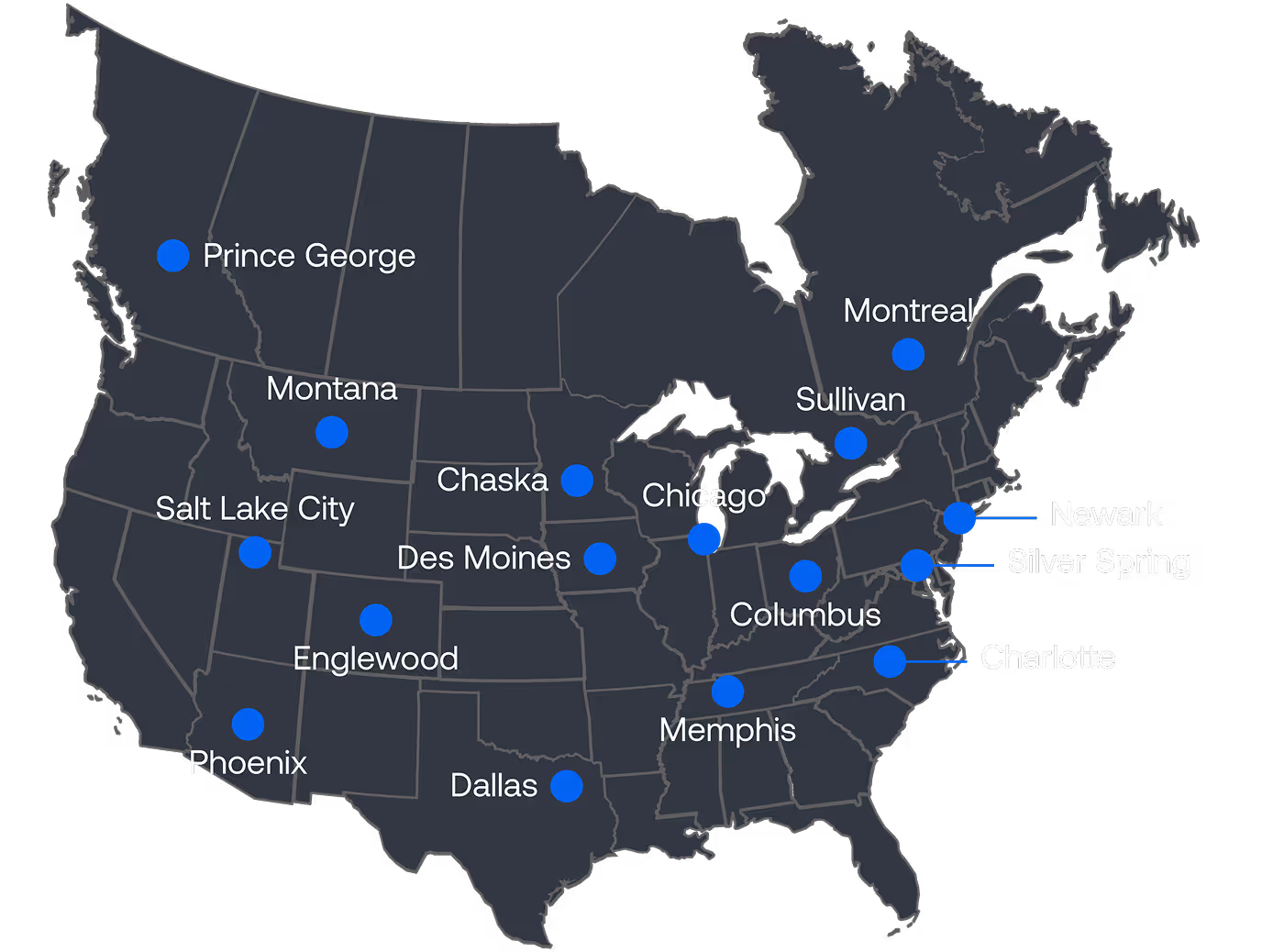

AI Data Centers and Power across North America

Data Center Portfolio

2GW+ in the portfolio, with 600MW of near-term capacity.

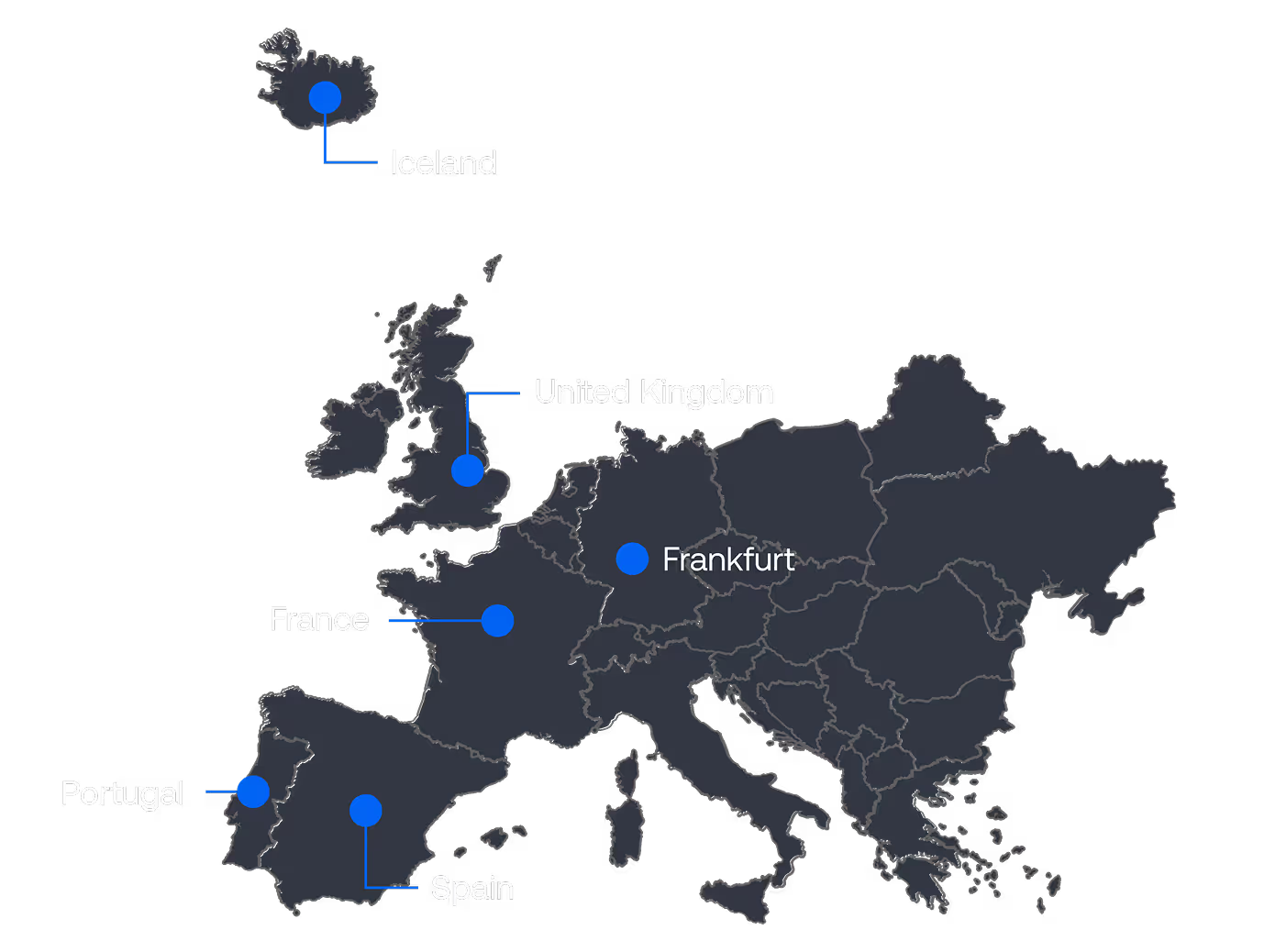

Expansion Capability in Europe and Beyond


150MW+ available in Europe: UK, Spain, France, Portugal, and Iceland.

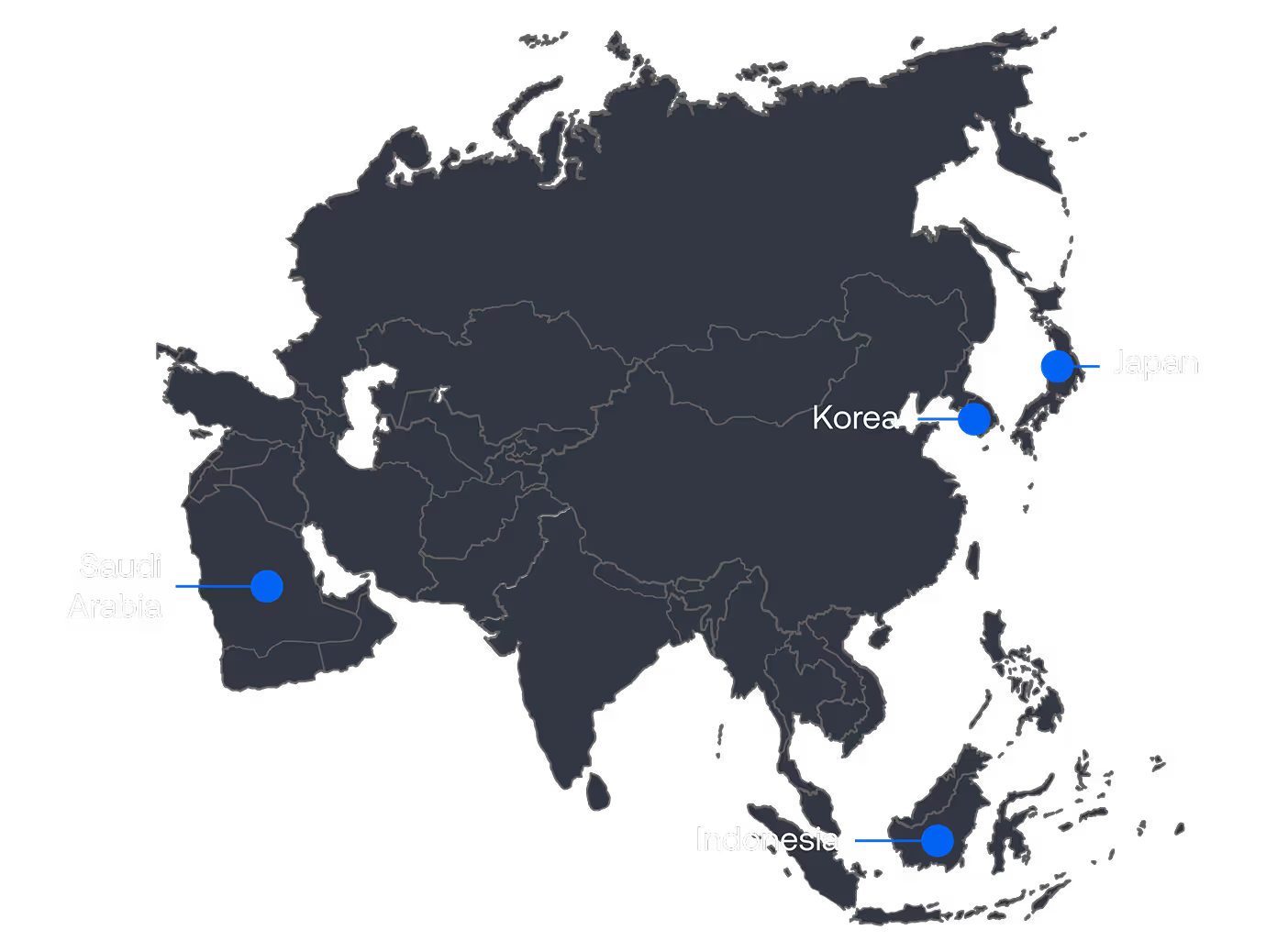

Next Frontiers – Asia and the Middle East


Options available based on the scale of the projects in Asia and the Middle East.

Hardware specs

- 01

GB200 NVL72 Rack

18x nodes with 2x "Bianca" GB200 Superchips, each with

- 2 Blackwell GPUs 384 GB HBM3e (total of 72x)

- 1 Grace CPU with 72 Arm Neoverse V2 cores (total of 36x)

- 14.4 Tbps InfiniBand

- Up to 3PB high-performance converged storage

- 02

B200 Cluster Node Specs

- 8x B200 180GB HBM3e SXM5 NVLink

- 112+ AMD or Intel cores

- 3.2 Tbps InfiniBand

- 3TB or larger DDR5

- Up to 3PB high-performance converged storage

- 03

H200 Cluster Node Specs

- 8x H200 141GB HBM3e SXM5 NVLink

- 96-128 AMD or Intel CPU cores

- 3.2 Tbps InfiniBand

- 1TB or 2TB DDR5

- Up to 3PB high-performance converged storage

- 04

H100 Cluster Node Specs

- 8x NVIDIA H100 80GB SXM5 NVLink

- 96-128 AMD or Intel CPU cores

- 3.2 Tbps InfiniBand

- 1TB DDR5

- Up to 3PB high-performance converged storage

- 05

A100 Cluster Node Specs

- 8x NVIDIA A100 80GB SXM4 NVLink

- 96-128 AMD or Intel CPU cores

- 1.6 Tbps InfiniBand

- 1TB DDR5

- Up to 3PB high-performance converged storage
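The aggregate figures implied by these specs can be checked with a few lines of arithmetic. The sketch below uses only numbers from the list above and assumes that the 384 GB HBM3e figure for GB200 applies per Superchip (two GPUs); the variable and function names are illustrative.

```python
# Per-node figures copied from the spec list above (GB of GPU memory per device).
NODE_SPECS = {
    "B200": {"gpus": 8, "gb_per_gpu": 180},
    "H200": {"gpus": 8, "gb_per_gpu": 141},
    "H100": {"gpus": 8, "gb_per_gpu": 80},
    "A100": {"gpus": 8, "gb_per_gpu": 80},
}

def node_gpu_memory_gb(name: str) -> int:
    """Total GPU memory of one node, in GB."""
    spec = NODE_SPECS[name]
    return spec["gpus"] * spec["gb_per_gpu"]

# GB200 NVL72 rack: 18 nodes, 2 Superchips per node, 2 GPUs and 1 CPU per Superchip.
NODES_PER_RACK = 18
SUPERCHIPS_PER_NODE = 2
GPUS_PER_SUPERCHIP = 2
HBM_GB_PER_SUPERCHIP = 384  # assumption: the 384 GB figure is per Superchip

rack_superchips = NODES_PER_RACK * SUPERCHIPS_PER_NODE
rack_gpus = rack_superchips * GPUS_PER_SUPERCHIP
rack_cpus = rack_superchips  # one Grace CPU per Superchip
rack_hbm_gb = rack_superchips * HBM_GB_PER_SUPERCHIP

# Capacity ratio behind the "nearly doubling" claim for HGX H200 vs HGX H100.
h200_vs_h100 = node_gpu_memory_gb("H200") / node_gpu_memory_gb("H100")

for name in NODE_SPECS:
    print(f"{name}: {node_gpu_memory_gb(name)} GB GPU memory per node")
print(f"GB200 NVL72: {rack_gpus} GPUs, {rack_cpus} CPUs, {rack_hbm_gb} GB HBM3e")
print(f"H200 / H100 node memory ratio: {h200_vs_h100:.2f}")
```

The counts match the "total of 72x" GPUs and "total of 36x" CPUs stated for the rack, and the H200 node total of 1128 GB corresponds to the roughly 1.1TB quoted for HGX H200.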
