Announcing General Availability of Together Instant Clusters, offering ready-to-use, self-service NVIDIA GPUs

Standing up multi‑node GPU clusters has historically been too manual and brittle: tickets, contract steps, and complex setup take valuable time away from AI engineers and researchers. Today, we are excited to announce the General Availability of Together Instant Clusters, offering an API-first developer experience. Instant Clusters deliver self‑service automation for AI infrastructure, from a single node (8 GPUs) to large multi‑node clusters with hundreds of interconnected GPUs, with support for NVIDIA Hopper and NVIDIA Blackwell GPUs.

AI Native companies can now manage sudden demand, whether it’s a training run or increased inference traffic, by adding capacity fast and bringing a cluster online automatically with the right orchestration (Kubernetes or Slurm) and networking. Instant Clusters can be provisioned in minutes, without long procurement cycles or manual approvals, and come preconfigured for low-latency inference and high-goodput distributed training.

“We train models to reason like clinicians over troves of multi-modal data. That means capturing subtle preferences, like how to resolve multiple diagnoses or align with payer-specific logic. With Together Instant Clusters, we can run large-scale reinforcement learning on clinical question sets, experiment rapidly, and distill that learning into smaller, more efficient models that often outperform much larger foundation models.” - Allan Bishop, Founding Engineer, Latent Health

Cloud Ergonomics for GPU Clusters

Developers expect the cloud to be API‑first, self‑service, and predictable. Historically, tightly networked GPU clusters haven’t felt that way — teams pieced together drivers, schedulers, and fabric by hand. Together Instant Clusters make GPU infrastructure feel like the rest of the cloud: automated from request to run, consistent across environments, and designed to scale from a single node to large multi‑node clusters — without changing how you work.

Self‑service, ready in minutes

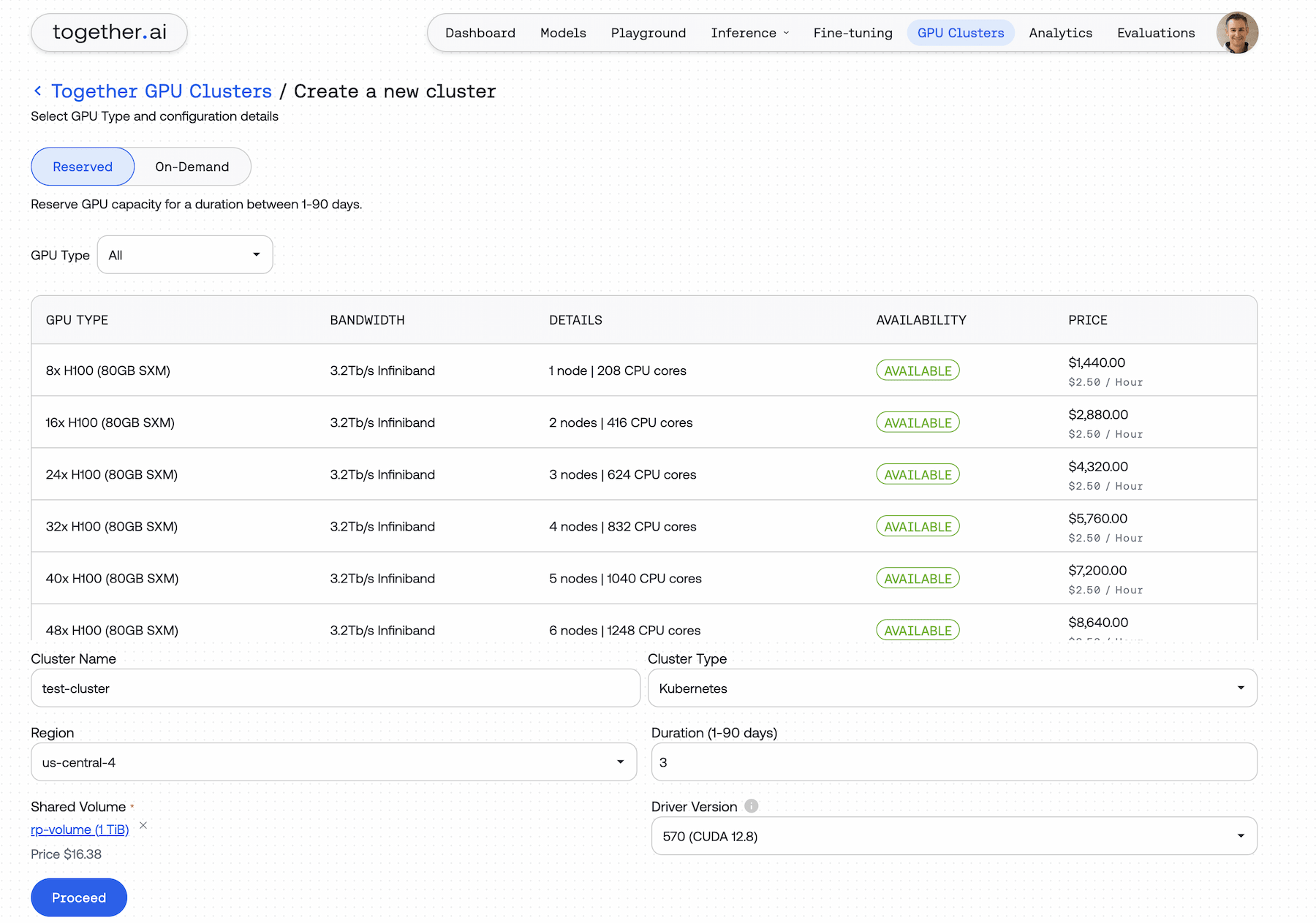

Provision through console, CLI, or API, and integrate with Terraform or SkyPilot for IaC and multi‑cloud workflows. Choose and lock NVIDIA driver/NVIDIA CUDA versions, bring your own container images, attach shared storage, and be ready to run in minutes.
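To make the idea of a pinned, reproducible cluster request concrete, here is a minimal Python sketch of how a team might describe a cluster in code before handing it to the console, CLI, API, or an IaC tool. Everything in it is an assumption for illustration: the `ClusterSpec` class, its field names, and the example version strings are hypothetical and are not the Together API schema. The only detail taken from this post is that a single node has 8 GPUs.

```python
import json
from dataclasses import dataclass, asdict

GPUS_PER_NODE = 8  # this post describes a single node as 8 GPUs


@dataclass(frozen=True)
class ClusterSpec:
    """Hypothetical cluster description; NOT the Together API schema."""
    name: str
    gpu_type: str          # illustrative label, e.g. "H100"
    num_gpus: int
    orchestrator: str      # "kubernetes" or "slurm"
    driver_version: str    # pinned NVIDIA driver version (placeholder value)
    cuda_version: str      # pinned CUDA version (placeholder value)
    container_image: str   # bring-your-own image reference
    shared_storage_gib: int = 0

    def __post_init__(self):
        # Reject requests that do not map onto whole 8-GPU nodes.
        if self.num_gpus <= 0 or self.num_gpus % GPUS_PER_NODE != 0:
            raise ValueError("num_gpus must be a positive multiple of 8")
        if self.orchestrator not in ("kubernetes", "slurm"):
            raise ValueError("orchestrator must be 'kubernetes' or 'slurm'")
        if self.shared_storage_gib < 0:
            raise ValueError("shared_storage_gib must be non-negative")

    @property
    def num_nodes(self) -> int:
        return self.num_gpus // GPUS_PER_NODE

    def to_json(self) -> str:
        # Stable key order keeps the spec diff-friendly in version control.
        return json.dumps(asdict(self), sort_keys=True, indent=2)
```

Keeping a spec like this in version control is one way to make sure that every run, from test to production, asks for the same driver, CUDA version, and image.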

Batteries included

Clusters come pre-loaded with the components teams usually spend days wiring up themselves:

- GPU Operator to manage drivers and runtime software.

- Ingress controller to handle traffic into your cluster.

- NVIDIA Network Operator for high-performance NVIDIA Quantum InfiniBand and NVIDIA Spectrum-X Ethernet with RoCE networking.

- Cert Manager for secure certificates and HTTPS endpoints.

These and other essentials are already in place, so your cluster is production-ready out of the box.

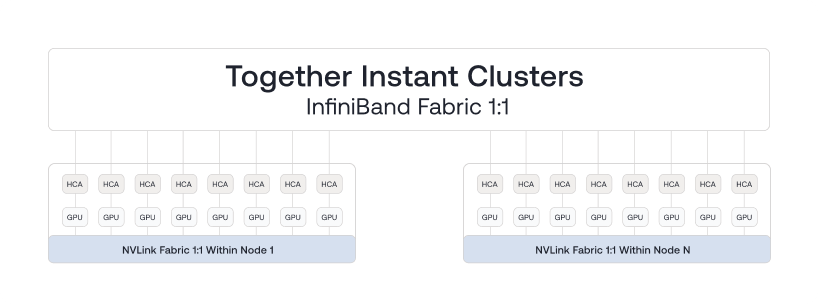

Optimized for Distributed Training

Training at scale demands the right interconnect and orchestration. Clusters are wired with non‑blocking NVIDIA Quantum‑2 InfiniBand scale-out compute fabric across nodes and NVIDIA NVLink and NVLink Switch inside the node, delivering ultra‑low‑latency, high‑throughput communication for multi‑node training.

Run with Kubernetes or Slurm (SSH when you need it), keep environments reproducible with version‑pinned drivers/CUDA, and checkpoint to shared storage—high‑bandwidth, parallel storage colocated with your compute; durable, resizable, and billed on demand. Ideal for pre‑training, reinforcement learning, and multi‑phase training schedules.
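As an illustration of checkpointing to shared storage, the sketch below writes each checkpoint to a temporary file and then renames it into place, so a job that restarts never picks up a half-written file. It is a minimal sketch under stated assumptions: the function names and the `step_XXXXXXXX.ckpt` naming scheme are hypothetical, the checkpoint is treated as opaque bytes, and whether a rename is atomic on a given shared filesystem should be confirmed for that filesystem.

```python
import os
import tempfile
from pathlib import Path
from typing import Optional


def save_checkpoint_atomic(payload: bytes, ckpt_dir: str, step: int) -> Path:
    """Write a checkpoint so readers never see a partially written file."""
    target_dir = Path(ckpt_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    final_path = target_dir / f"step_{step:08d}.ckpt"
    # Temp file lives in the same directory so the rename stays on one filesystem.
    fd, tmp_name = tempfile.mkstemp(dir=str(target_dir), suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(payload)
            f.flush()
            os.fsync(f.fileno())  # push bytes to storage before publishing
        # Atomic on POSIX local filesystems; verify for your shared filesystem.
        os.replace(tmp_name, final_path)
    except BaseException:
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise
    return final_path


def latest_checkpoint(ckpt_dir: str) -> Optional[Path]:
    """Return the highest-step checkpoint, or None if there is none yet."""
    # Zero-padded step numbers make lexicographic order match numeric order.
    ckpts = sorted(Path(ckpt_dir).glob("step_*.ckpt"))
    return ckpts[-1] if ckpts else None
```

On a cluster, `ckpt_dir` would point at wherever the shared storage volume is mounted, and a multi-phase training schedule can call `latest_checkpoint` at startup to resume from the last completed step.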

Recommendation: for tightly coupled distributed training, choose NVIDIA HGX H100 for maximum bandwidth within and between nodes; NVIDIA Blackwell is also available for next‑gen training as capacity expands.

Scalable Burst Capacity for Production Inference

When usage surges, services need to burst — not re‑architect. Use Together Instant Clusters to add inference capacity quickly and keep latency SLAs intact. Deploy your serving stack on clusters sized for the moment, resize clusters as user traffic spikes or subsides, and keep one operational model from test to production.

Recommendation: for elastic serving, our NVIDIA HGX H100 Inference plan offers strong cost‑performance and broad framework support. For inference workflows tied to training data/weights, use shared storage and the same environment image for consistency. SkyPilot makes it easy to burst across clouds with one job spec when needed.
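A rough way to reason about how far to resize a cluster during a spike is to work backward from peak request rate. The sketch below is a back-of-the-envelope calculation, not a sizing tool: the per-GPU throughput and the headroom factor are assumptions you would measure for your own model and serving stack, and the function name is hypothetical. The 8-GPUs-per-node figure comes from this post.

```python
import math

GPUS_PER_NODE = 8  # this post describes a single node as 8 GPUs


def nodes_needed(peak_rps: float, rps_per_gpu: float, headroom: float = 0.2) -> int:
    """Estimate how many 8-GPU nodes cover a peak request rate.

    peak_rps:     expected peak requests per second (measured or forecast)
    rps_per_gpu:  sustained requests per second one GPU serves within your
                  latency SLA (an assumption; benchmark your own stack)
    headroom:     extra fraction of capacity kept in reserve
    """
    if rps_per_gpu <= 0:
        raise ValueError("rps_per_gpu must be positive")
    if peak_rps < 0 or headroom < 0:
        raise ValueError("peak_rps and headroom must be non-negative")
    required_gpus = peak_rps * (1.0 + headroom) / rps_per_gpu
    # Always keep at least one node so the service stays reachable.
    return max(1, math.ceil(required_gpus / GPUS_PER_NODE))
```

For example, with an assumed 12.5 requests per second per GPU and 20% headroom, `nodes_needed(900, 12.5)` gives 11 nodes at peak and `nodes_needed(200, 12.5)` gives 3 nodes once traffic subsides.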

Reliable at Scale

Training on large GPU clusters leaves no room for weak links—a bad NIC, miswired cable, or overheating GPU can stall jobs or quietly degrade results. With General Availability, we’ve put in place a full reliability regimen so clusters are solid before a job starts and remain stable throughout. Every node undergoes burn-in and NVLink/NVSwitch checks; inter-node connections are validated with NCCL all-reduces; and reference training runs confirm tokens/sec and Model FLOPs Utilization (MFU) targets. Once deployed, clusters are continuously monitored: idle nodes re-run tests, 24/7 observability flags anomalies in real time, and SLAs with fast communication and fair compensation ensure issues are addressed transparently.
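For readers who want to sanity-check the same metrics on their own runs, here is a minimal sketch of the two calculations. It relies on assumptions that are not from this post: training FLOPs are approximated as 6 × parameters × tokens (a common rule of thumb for dense transformers that ignores attention FLOPs), the peak FLOPs per GPU is a value you supply from the hardware datasheet, and the bus-bandwidth formula is the all-reduce correction factor documented by the open-source nccl-tests project. The function names are hypothetical.

```python
def model_flops_utilization(
    tokens_per_sec: float,
    num_params: float,
    num_gpus: int,
    peak_flops_per_gpu: float,
) -> float:
    """Approximate MFU: achieved model FLOPs/s over aggregate peak FLOPs/s."""
    if num_gpus <= 0 or peak_flops_per_gpu <= 0:
        raise ValueError("num_gpus and peak_flops_per_gpu must be positive")
    # ~6 FLOPs per parameter per token (forward + backward), an approximation.
    achieved_flops_per_sec = 6.0 * num_params * tokens_per_sec
    return achieved_flops_per_sec / (num_gpus * peak_flops_per_gpu)


def allreduce_bus_bandwidth(
    bytes_per_rank: float, seconds: float, num_ranks: int
) -> float:
    """Bus bandwidth (bytes/s) for an all-reduce, as defined by nccl-tests."""
    if seconds <= 0 or num_ranks < 2:
        raise ValueError("seconds must be positive and num_ranks at least 2")
    algorithm_bandwidth = bytes_per_rank / seconds
    # The 2*(n-1)/n factor makes results comparable across rank counts.
    return algorithm_bandwidth * 2 * (num_ranks - 1) / num_ranks
```

As a worked example, a 7-billion-parameter model processing 600,000 tokens per second on 64 GPUs, with an assumed peak of 989e12 FLOPs per GPU, comes out at an MFU of roughly 0.40. An all-reduce of 8e9 bytes per rank that completes in 0.1 seconds across 8 ranks corresponds to a bus bandwidth of about 1.4e11 bytes per second.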

Built for AI natives (including Together's own researchers)

During preview, AI Native companies used Instant Clusters to accelerate their work:

“As an AI Lab, we regularly train a range of models — from large language models to multimodal systems — and our workloads are highly bursty. Together Instant Clusters let us spin up large GPU clusters on demand for 24–48 hours, run intensive training jobs, and then scale back down just as quickly. The ability to get high-performance, interconnected NVIDIA GPUs without the delays of procurement or setup has been a game-changer for our team’s productivity and research velocity.” - Kunal Singh, Lead Data Scientist, Fractal AI research Lab

Together AI is unique among AI cloud providers: a significant portion of our team are AI researchers. Our researchers both use and contribute to the platform itself; Instant Clusters are a direct result of that feedback, built for teams pushing frontier AI and training frontier‑scale models.

Our own Tri Dao, creator of FlashAttention, had this to say:

“The limiter isn’t just GPU peak FLOPs; it’s how fast we can get a GPU cluster to start. If we can spin up a clean NVIDIA Hopper GPU or NVIDIA Blackwell GPU cluster with good networking in minutes, our researchers can spend more cycles on data, model architecture, system design, and kernels. That’s how we optimize research velocity.” — Tri Dao, Together AI Chief Scientist

Pricing

Simple, straightforward pricing with no commitments or surprise fees. Choose the term that fits your run: Hourly, 1–6 Days, or 1 Week–3 Months. Prices are quoted in $/GPU-hour.

Storage & data

- Shared storage: $0.16 per GiB‑month (high‑performance, long‑lived, resizable).

- Data transfer: free egress and ingress.
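To estimate a bill from these units, the arithmetic is simple enough to sketch in a few lines of Python. The storage rate below is the $0.16 per GiB‑month quoted above; the GPU rate is deliberately left as a parameter, since it depends on the GPU type and term you choose, and the function names are hypothetical.

```python
STORAGE_USD_PER_GIB_MONTH = 0.16  # shared storage rate quoted in this post
GIB_PER_TIB = 1024


def shared_storage_monthly_cost(size_tib: float) -> float:
    """Monthly shared-storage cost in USD for a volume sized in TiB."""
    return round(size_tib * GIB_PER_TIB * STORAGE_USD_PER_GIB_MONTH, 2)


def gpu_hours(num_gpus: int, wall_clock_hours: float) -> float:
    """GPU-hours consumed by a run: every GPU is billed for every hour."""
    return num_gpus * wall_clock_hours


def run_cost(num_gpus: int, wall_clock_hours: float, usd_per_gpu_hour: float) -> float:
    """Compute cost in USD; usd_per_gpu_hour must come from the published rate."""
    return round(gpu_hours(num_gpus, wall_clock_hours) * usd_per_gpu_hour, 2)
```

For example, a 10 TiB shared volume works out to $1,638.40 per month, and a 48-hour burst on 16 GPUs consumes 768 GPU-hours, which you would multiply by the published $/GPU-hour rate for your chosen term.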

Get Started Today

Together Instant Clusters with NVIDIA Blackwell and Hopper GPUs are available now. To get started:

- Create a cluster through the Together AI console

- Read the documentation
