Gryphe MythoMax L2 Lite (13B) API

LLM

An experimental merge of MythoLogic-L2 and Huginn. It uses a tensor-intermingling technique intended to better integrate the tensors at the front and end of the model.

API Usage

Endpoint

Gryphe/MythoMax-L2-13b-Lite

RUN INFERENCE

curl -X POST "https://api.together.xyz/v1/chat/completions" \
  -H "Authorization: Bearer $TOGETHER_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "Gryphe/MythoMax-L2-13b-Lite",
    "messages": [{"role": "user", "content": "What are some fun things to do in New York?"}]
  }'

RUN INFERENCE

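from together import Together

# The client reads the API key from the TOGETHER_API_KEY environment variable.
client = Together()

response = client.chat.completions.create(
    model="Gryphe/MythoMax-L2-13b-Lite",
    messages=[{"role": "user", "content": "What are some fun things to do in New York?"}],
)

# Print the text of the first (and here, only) completion.
print(response.choices[0].message.content)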
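
Where the SDK is not installed, the request made by the curl example can be reproduced with Python's standard library alone. The following is a minimal sketch, not an official client: the helper names `build_request` and `ask` are hypothetical, and the response is assumed to follow the same `choices[0].message.content` path that the SDK examples read.

```python
import json
import os
import urllib.request

API_URL = "https://api.together.xyz/v1/chat/completions"
MODEL = "Gryphe/MythoMax-L2-13b-Lite"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build the same POST request as the curl example, without sending it."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first choice's message text."""
    request = build_request(prompt, os.environ["TOGETHER_API_KEY"])
    with urllib.request.urlopen(request) as reply:
        payload = json.load(reply)
    # Assumed response shape: the same field path the SDK examples read.
    return payload["choices"][0]["message"]["content"]
```

Calling `ask("What are some fun things to do in New York?")` with `TOGETHER_API_KEY` set would send the request. Keeping `build_request` separate makes the payload easy to inspect without network access.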

RUN INFERENCE

import Together from "together-ai";

// The client reads the API key from the TOGETHER_API_KEY environment variable.
const together = new Together();

const response = await together.chat.completions.create({
  messages: [{ role: "user", content: "What are some fun things to do in New York?" }],
  model: "Gryphe/MythoMax-L2-13b-Lite",
});

// Print the text of the first completion.
console.log(response.choices[0].message.content);

Model Provider: Gryphe

Type: Chat

Variant: Lite

Parameters: 13B

Deployment: ✔ Serverless

Quantization:

Context length: 4K

Pricing: $0.10
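
With a 4K context length, long conversations need to be trimmed before they are sent. The sketch below shows one simple way to do that: it keeps the most recent messages that fit under a budget. It rests on labeled assumptions: that "4K" means 4096 tokens, that the window covers both prompt and reply, and that roughly four characters make one token. That estimate is a crude heuristic, not the model's tokenizer. The names `estimate_tokens` and `trim_history` are hypothetical.

```python
CONTEXT_TOKENS = 4096   # assumption: "4K" means 4096 tokens
CHARS_PER_TOKEN = 4     # assumption: rough heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate: ceiling of len/4, never less than 1."""
    return max(1, -(-len(text) // CHARS_PER_TOKEN))


def trim_history(messages, reserve_for_reply=512, limit=CONTEXT_TOKENS):
    """Keep the newest messages whose estimated size fits the budget."""
    budget = limit - reserve_for_reply
    kept = []
    used = 0
    # Walk from newest to oldest so the most recent turns survive.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

The trimmed list can be passed as `messages` to `client.chat.completions.create`. For tighter control, the character heuristic could be replaced with a real tokenizer for the model.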

Run in playground

Deploy model

Quickstart docs

Quickstart docs

How to use Gryphe MythoMax L2 Lite (13B)

Model details

Prompting Gryphe MythoMax L2 Lite (13B)

Applications & Use Cases

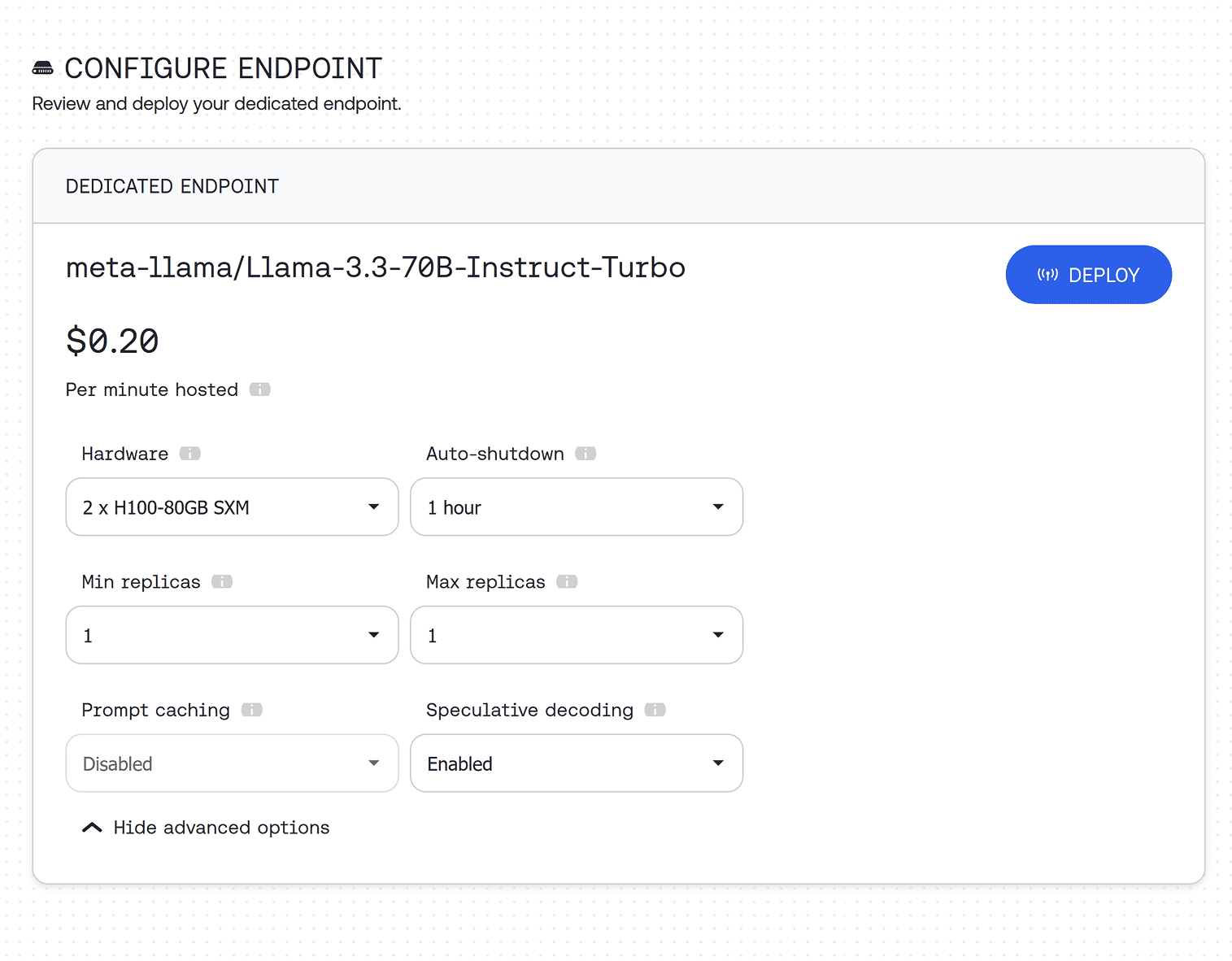

Looking for production scale? Deploy on a dedicated endpoint

Deploy Gryphe MythoMax L2 Lite (13B) on a dedicated endpoint with custom hardware configuration, as many instances as you need, and auto-scaling.