
BGE-Large-EN v1.5 API

Embeddings

BGE-Large-EN v1.5 from BAAI maps text to dense vectors for retrieval, classification, clustering, semantic search, and vector databases used in LLM applications.
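Retrieval and semantic search with embeddings usually come down to ranking stored passage vectors by their similarity to a query vector. The sketch below assumes cosine similarity as the metric and uses toy 3-dimensional vectors in place of real 1024-dimensional BGE-Large outputs; `rank_passages`, `cosine_similarity`, and `with_query_instruction` are hypothetical helpers, not part of any SDK. The query prefix is the one the BGE authors suggest for short-query-to-passage retrieval; for v1.5 it is optional, and passages are embedded without it.

```python
import math
from typing import Sequence

# Query prefix suggested by the BGE authors for short-query-to-passage retrieval.
# Optional for v1.5; apply it to queries only, never to the passages being searched.
BGE_QUERY_INSTRUCTION = "Represent this sentence for searching relevant passages: "


def with_query_instruction(query: str) -> str:
    """Prepend the BGE retrieval instruction to a search query before embedding it."""
    return BGE_QUERY_INSTRUCTION + query


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def rank_passages(query_vec, passage_vecs, top_k=3):
    """Return (passage_index, score) pairs for the top_k most similar passages."""
    scored = [(i, cosine_similarity(query_vec, p)) for i, p in enumerate(passage_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy vectors standing in for real embeddings returned by the API.
query = [1.0, 0.0, 0.0]
passages = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [0.5, 0.5, 0.5]]
print(rank_passages(query, passages, top_k=2))
```

In practice the query string would be passed through `with_query_instruction` before being sent to the embeddings endpoint, and the passage vectors would come from earlier calls to the same endpoint.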


BGE-Large-EN v1.5 API Usage

Endpoint

BAAI/bge-large-en-v1.5

Run inference with cURL:

curl -X POST "https://api.together.xyz/v1/embeddings" \
  -H "Authorization: Bearer $TOGETHER_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "BAAI/bge-large-en-v1.5",
    "input": "Our solar system orbits the Milky Way galaxy at about 515,000 mph"
  }'
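The cURL call returns a JSON body. The SDK examples on this page read the vector from `response.data[0].embedding`, so the sketch below assumes the raw JSON follows the same OpenAI-style shape, `{"data": [{"index": 0, "embedding": [...]}, ...]}`. The helper `extract_embeddings` and the sample body are hypothetical and truncated for illustration; a real BGE-Large vector has 1024 values.

```python
import json


def extract_embeddings(body: str) -> list[list[float]]:
    """Return the embedding vectors from a response body, ordered by input position.

    Assumes an OpenAI-style shape: {"data": [{"index": 0, "embedding": [...]}, ...]}.
    """
    payload = json.loads(body)
    items = payload["data"]
    # Sort by "index" when present so each vector lines up with the input it came from.
    items = sorted(items, key=lambda item: item.get("index", 0))
    return [item["embedding"] for item in items]


# Hypothetical, truncated response body with items deliberately out of order.
sample = (
    '{"object": "list", "data": ['
    '{"object": "embedding", "index": 1, "embedding": [0.3, 0.4]}, '
    '{"object": "embedding", "index": 0, "embedding": [0.1, 0.2]}]}'
)
print(extract_embeddings(sample))  # [[0.1, 0.2], [0.3, 0.4]]
```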

Run inference with Python:

from together import Together

client = Together()  # reads the API key from the TOGETHER_API_KEY environment variable

response = client.embeddings.create(
    model="BAAI/bge-large-en-v1.5",
    input="Our solar system orbits the Milky Way galaxy at about 515,000 mph",
)

print(response.data[0].embedding)
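To embed many documents, one option is to send them in batches rather than one request per string. This is a minimal sketch under two assumptions not stated on this page: that `client.embeddings.create` accepts a list of strings for `input`, and that each returned item exposes `.index` and `.embedding`. The function `embed_in_batches` and the default `batch_size` of 32 are hypothetical choices; check the API docs for actual request-size limits.

```python
from typing import Any, Sequence

MODEL = "BAAI/bge-large-en-v1.5"


def embed_in_batches(client: Any, texts: Sequence[str], batch_size: int = 32) -> list[list[float]]:
    """Embed many texts, one request per batch, preserving input order.

    Assumes client.embeddings.create(model=..., input=[...]) accepts a list of
    strings and returns items with .index and .embedding attributes.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    vectors: list[list[float]] = []
    for start in range(0, len(texts), batch_size):
        batch = list(texts[start:start + batch_size])
        response = client.embeddings.create(model=MODEL, input=batch)
        # Sort by index so each vector matches its text within this batch.
        for item in sorted(response.data, key=lambda d: d.index):
            vectors.append(item.embedding)
    return vectors
```

With the SDK installed, usage would look like `embed_in_batches(Together(), ["first text", "second text"])`. The client is passed in rather than created inside the function, which keeps the batching logic independent of how the key is configured and easy to test with a stand-in object.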

Run inference with JavaScript (Node.js, as an ES module so top-level await works):

import Together from "together-ai";

const together = new Together(); // reads the API key from the TOGETHER_API_KEY environment variable

const response = await together.embeddings.create({
  model: "BAAI/bge-large-en-v1.5",
  input: "Our solar system orbits the Milky Way galaxy at about 515,000 mph",
});

console.log(response.data[0].embedding);

Model Provider: BAAI
Type: Embeddings
Variant: Large
Parameters: 335M
Deployment: ✔ Serverless
Quantization: not specified
Context length: 512 tokens
Pricing: $0.02
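Because the context length is 512 tokens, longer documents generally need to be split before embedding (whether the API truncates or rejects longer inputs is not stated here). The sketch below uses overlapping word windows as a rough, assumed proxy for tokens: BGE uses a BERT-style WordPiece tokenizer that can emit several tokens per word, so the hypothetical defaults of 300 words with a 50-word overlap leave headroom under the limit. A tokenizer-exact splitter would be more precise.

```python
def chunk_words(text: str, max_words: int = 300, overlap: int = 50) -> list[str]:
    """Split text into overlapping windows of at most max_words words.

    Word count is only an approximation of token count; keep max_words well
    below the 512-token context length to leave room for subword splits.
    """
    if max_words < 1 or not 0 <= overlap < max_words:
        raise ValueError("need max_words >= 1 and 0 <= overlap < max_words")
    words = text.split()
    if not words:
        return []
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
print(chunk_words(doc, max_words=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap repeats a few words across neighboring chunks so that a sentence cut at a boundary still appears intact in at least one chunk.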



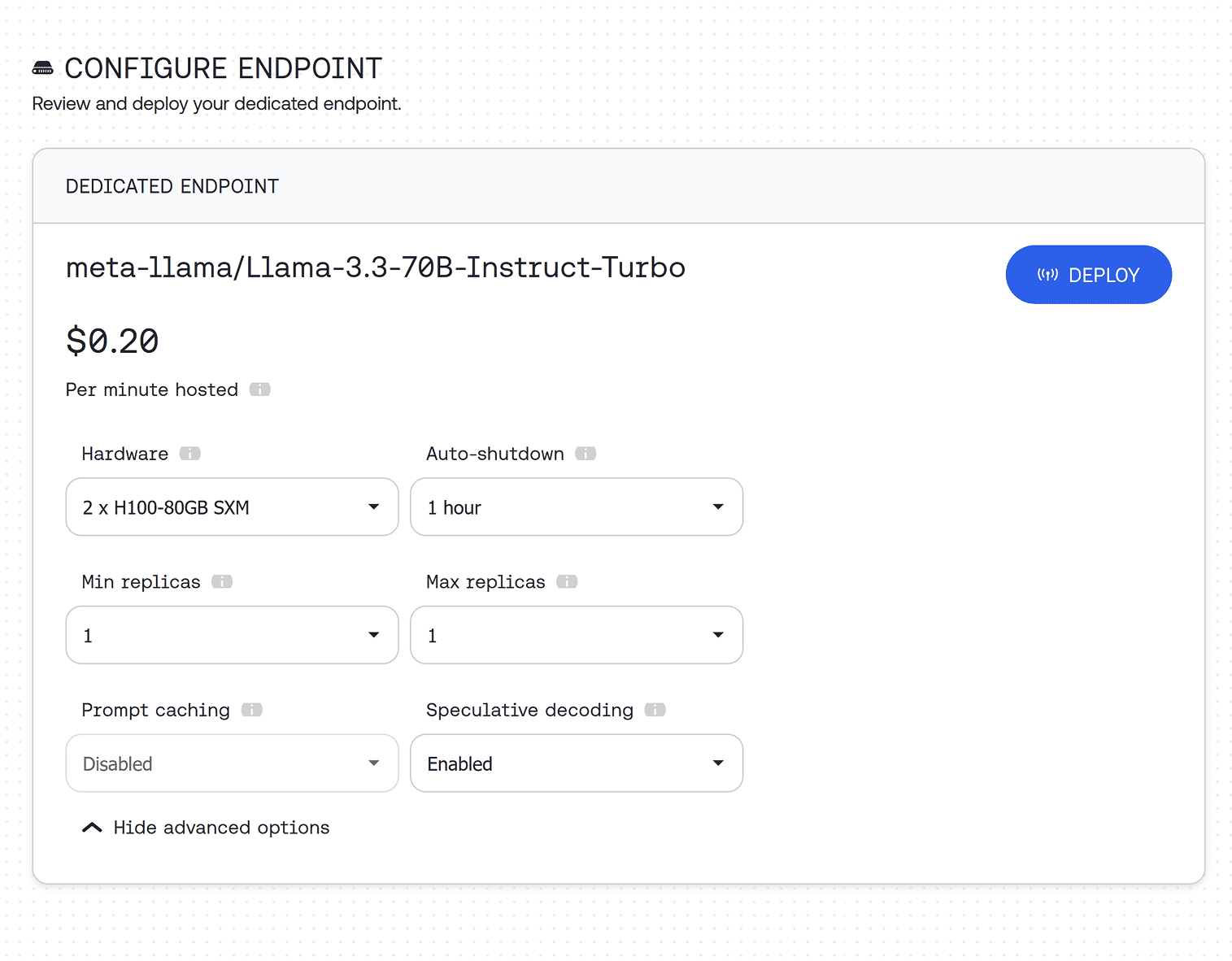

Looking for production scale? Deploy on a dedicated endpoint

Deploy BGE-Large-EN v1.5 on a dedicated endpoint with custom hardware configuration, as many instances as you need, and auto-scaling.