Large Language Models (LLMs) have revolutionized how we interact with and build conversational AI systems. While these models demonstrate impressive capabilities out of the box in general conversation, organizations face significant challenges when attempting to apply them to domain-specific business contexts.

Despite their broad capabilities, general-purpose LLMs face several key limitations:

- Domain Adaptation: Organizations often struggle with getting LLMs to understand their unique data formats and specific user interaction patterns.

- Knowledge Constraints: Base models have knowledge cutoffs and may lack specialized domain expertise and access to private enterprise documents.

- Multi-Turn Complexity: While base models handle single exchanges well, maintaining context and coherence across nuanced multi-step conversations requires further specialized post-training.

This is where fine-tuning on your own data comes to the rescue.

Why Fine-Tuning Matters

Fine-tuning addresses these challenges by letting organizations adapt off-the-shelf open models to their specific needs. Unlike pre-training, which processes vast amounts of general, largely uncurated data, fine-tuning an already instruction-tuned model is a more focused process that requires a much smaller, higher-quality labeled dataset of domain-specific examples.

In this article, we’ll talk specifically about multi-turn fine-tuning, whereby we can teach the model to maintain context across multiple exchanges while adhering to specific conversation patterns. The process helps models handle domain-specific queries with greater accuracy and ensures they respect guardrails that may be unique to your business context. This multi-turn capability is especially critical in scenarios like customer service, technical support, or complex multi-hop task completion, where a single exchange is rarely sufficient to address the user's needs.

Another practical example of multi-turn fine-tuning is the multi-turn function-calling workflow. If you need an LLM to solve complex problems by using tools, you will need to train it to identify which tools to call and in what order, and to make decisions based on the information returned by intermediate tool calls.

In this hands-on walkthrough, we will discuss the complete process of fine-tuning LLMs for multi-turn conversations. We’ll cover:

- Multi-turn conversation dataset preparation

- Loss masking in instruction tuning

- An example of fine-tuning Llama 3.1 8B on a conversational dataset

We'll explore both theoretical concepts and practical implementation details, helping you create conversational AI systems that align with your organization's needs. Whether you're building a customer service bot that needs to maintain context across multiple interactions, or developing a specialized assistant that handles complex multi-step processes, understanding how to properly fine-tune LLMs for multi-turn conversations is critical.

If you would like to dive into code directly, please refer to the code notebook here.

Dataset Preparation

The most important and hardest part of successfully fine-tuning an LLM is proper dataset preparation. Thanks to services such as the Together Fine-Tuning API, the fine-tuning itself is now much easier than obtaining and preparing data that’s worth fine-tuning on! For multi-turn conversations, we need to structure our data to capture the back-and-forth nature of dialogue while ensuring the model learns to generate appropriate responses rather than memorizing entire conversations.

Key aspects of the dataset preparation:

- Proper conversation structure with clear turn delineation

- System messages to set the context

- Consistent role labeling (User/Assistant)

- JSONL format compatible with common fine-tuning frameworks

The dataset must be prepared in the chat format: every line of the JSONL file is a JSON object with a "messages" field containing a list of messages, and every message must have a "role" and a "content" field. The "role" must be one of "system", "user", or "assistant". You can read more about the format in our docs.
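To make the format concrete, here is a minimal local sketch of one multi-turn example and a basic structural check. The helper name `validate_example` and the sample conversation are illustrative assumptions, not part of the Together API, and the check covers only the rules stated above.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_example(example):
    """Return a list of problems found in one chat-format example (empty if none).

    Hypothetical helper: it checks only the rules described in the text above.
    """
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["'messages' must be a non-empty list"]
    problems = []
    for i, message in enumerate(messages):
        if not isinstance(message, dict):
            problems.append(f"message {i} is not an object")
            continue
        if message.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i} has an invalid role: {message.get('role')!r}")
        if not isinstance(message.get("content"), str):
            problems.append(f"message {i} is missing a string 'content'")
    return problems


# A made-up multi-turn conversation in the chat format.
example = {
    "messages": [
        {"role": "system", "content": "You are a helpful support assistant."},
        {"role": "user", "content": "My order has not arrived yet."},
        {"role": "assistant", "content": "Sorry to hear that. What is the order number?"},
        {"role": "user", "content": "It is 12345."},
        {"role": "assistant", "content": "Thanks, I will look into order 12345."},
    ]
}

# JSONL means one JSON object per line, so each example is serialized on a single line.
jsonl_line = json.dumps(example)
```

Writing one such line per conversation to a `.jsonl` file yields a dataset in the shape described above.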

Once your dataset is in the above format, you can upload the .jsonl file to Together AI as shown below. Before uploading the dataset, we also check the file to make sure it is formatted and prepared correctly.

Loss Masking in Instruction Fine-Tuning

A critical consideration when fine-tuning LLMs for conversational tasks is how to handle loss computation during training. Traditionally, many practitioners have followed the practice of masking instructions when calculating the loss function, but recent research suggests this might not always be optimal.

Loss masking in instruction fine-tuning refers to the practice of selectively including or excluding certain parts of the input when computing the training loss. There are typically three approaches:

- No Instruction Masking: The default approach where the loss is computed on all tokens, including both instructions and responses.

- Full Instruction Masking: The currently common approach where the loss is only computed on the response tokens, masking out all instruction tokens.

- Boilerplate Masking: A hybrid approach where only repetitive template text (like "Below is an instruction...") is masked while keeping both the instruction and the response content.

In the “Instruction Tuning With Loss Over Instructions” paper, the authors challenged the conventional wisdom and showed that not masking instructions (except for special tokens) often leads to better model performance than the traditional masking approach. However, the effectiveness of this strategy isn't universal: it depends heavily on two key dataset characteristics, namely the ratio between instruction and response lengths and the overall size of the training dataset. These findings suggest that practitioners should carefully consider their specific use case and dataset properties when deciding on a masking strategy, rather than defaulting to full instruction masking.
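The difference between no masking and full instruction masking can be sketched with toy data. The token IDs below are made up, the function name `build_labels` and its `mask_instructions` flag are hypothetical, and the sketch masks at the level of whole messages. A real trainer would also handle chat-template tokens and the next-token shift. The value -100 is the default `ignore_index` of PyTorch's cross-entropy loss, which is why it is commonly used to mark tokens excluded from the loss.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss


def build_labels(segments, mask_instructions=True):
    """Build (input_ids, labels) from a list of (role, token_ids) segments.

    With mask_instructions=True, only assistant tokens keep their labels
    (full instruction masking). With False, every token contributes to the loss.
    """
    input_ids, labels = [], []
    for role, token_ids in segments:
        input_ids.extend(token_ids)
        if role == "assistant" or not mask_instructions:
            labels.extend(token_ids)
        else:
            # Tokens labeled IGNORE_INDEX are skipped when the loss is computed.
            labels.extend([IGNORE_INDEX] * len(token_ids))
    return input_ids, labels


# Toy conversation with made-up token IDs.
segments = [
    ("system", [1, 2]),
    ("user", [3, 4, 5]),
    ("assistant", [6, 7]),
    ("user", [8]),
    ("assistant", [9, 10, 11]),
]

_, masked_labels = build_labels(segments, mask_instructions=True)
_, unmasked_labels = build_labels(segments, mask_instructions=False)
```

In the masked case, the loss is computed on 5 of the 11 tokens, which shows how the instruction-to-response length ratio changes the amount of training signal per example.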

The Together Fine-Tuning API now lets you choose whether loss masking is applied to your fine-tuning job through the new `train_on_inputs` parameter:

- `False` enables loss masking;

- `True` disables loss masking, so the loss is calculated on all tokens;

- `"auto"` enables or disables loss masking depending on the input dataset format.

To learn more about loss masking, please refer to our docs.

Real-World Example of Conversation Data Fine-tuning

In this section, we demonstrate how you can train your LLM to carry on longer-form discussions better by fine-tuning it on multi-turn conversational data.

CoQA is a large-scale dataset for building Conversational Question Answering systems. The goal of the CoQA challenge is to measure the ability of machines to understand a text passage and answer a series of interconnected questions that appear in a conversation.

CoQA contains 127,000+ questions with answers collected from 8,000+ conversations. Each conversation is collected by pairing two crowdworkers to chat about a passage in the form of questions and answers. CoQA has many challenging phenomena not present in existing reading comprehension datasets, e.g., coreference and pragmatic reasoning.

The code below demonstrates how to convert the CoQA dataset to the conversational format expected by the Together Fine-Tuning API.
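As a minimal sketch of such a conversion, the snippet below assumes each CoQA record has a `story` string, a `questions` list, and an `answers` dict whose `input_text` list holds the free-form answers. Check this layout against the copy of the dataset you load. The function name, the system prompt wording, and the toy record are illustrative assumptions rather than the original notebook code.

```python
import json

# Hypothetical system prompt; adapt the wording to your own use case.
SYSTEM_PROMPT = "Read the story and extract answers for the questions.\nStory: {story}"


def coqa_to_messages(row):
    """Convert one CoQA-style record into the chat format.

    The story goes into the system message, and each question/answer pair
    becomes a user turn followed by an assistant turn.
    """
    messages = [{"role": "system", "content": SYSTEM_PROMPT.format(story=row["story"])}]
    for question, answer in zip(row["questions"], row["answers"]["input_text"]):
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    return {"messages": messages}


# Toy record in the assumed layout (not taken from the real dataset).
toy_row = {
    "story": "Anna has a red kite. She flies it in the park.",
    "questions": ["What does Anna have?", "What color is it?", "Where does she fly it?"],
    "answers": {"input_text": ["a kite", "red", "in the park"]},
}

converted = coqa_to_messages(toy_row)
jsonl_line = json.dumps(converted)  # one line of the training .jsonl file
```

Note that the second question ("What color is it?") only makes sense given the earlier turns, which is exactly the kind of dependency multi-turn fine-tuning is meant to teach.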

Create a fine-tuning job:

Once the job is launched, you’ll be able to see and track it on the dashboard:

Once the fine-tuning job is completed, you’ll be able to see the model on the job page:

Evaluating Performance

Once the model is fine-tuned, we can compare performance improvements on the CoQA validation set. For evaluation, CoQA uses two metrics: F1 score, which measures word overlap between predicted and ground truth answers, and Exact Match (EM), which requires the prediction to exactly match one of the ground truth answers. F1 is the primary metric, as it better handles free-form answers by giving partial credit for partially correct responses.

Below, you can see an example implementation of computing evaluation metrics on Together AI’s platform:
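As a rough local illustration of the two metrics, the sketch below uses the answer normalization common in extractive QA evaluation (lowercasing, stripping punctuation and English articles, collapsing whitespace) and a token-overlap F1. The function names are hypothetical. Taking the maximum F1 over references is a simplifying assumption, and the official CoQA evaluation script may aggregate over multiple references differently.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, references):
    """1.0 if the normalized prediction equals any normalized reference, else 0.0."""
    return float(any(normalize(prediction) == normalize(ref) for ref in references))


def f1(prediction, reference):
    """Token-overlap F1 between one prediction and one reference."""
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; exactly one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def best_f1(prediction, references):
    """Simplification: score against the closest reference."""
    return max(f1(prediction, ref) for ref in references)
```

For example, the prediction "in the park" scored against the reference "the park" fails exact match but still earns partial F1 credit, which is why F1 is the more forgiving metric for free-form answers.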

Deploy Model and Run Evals

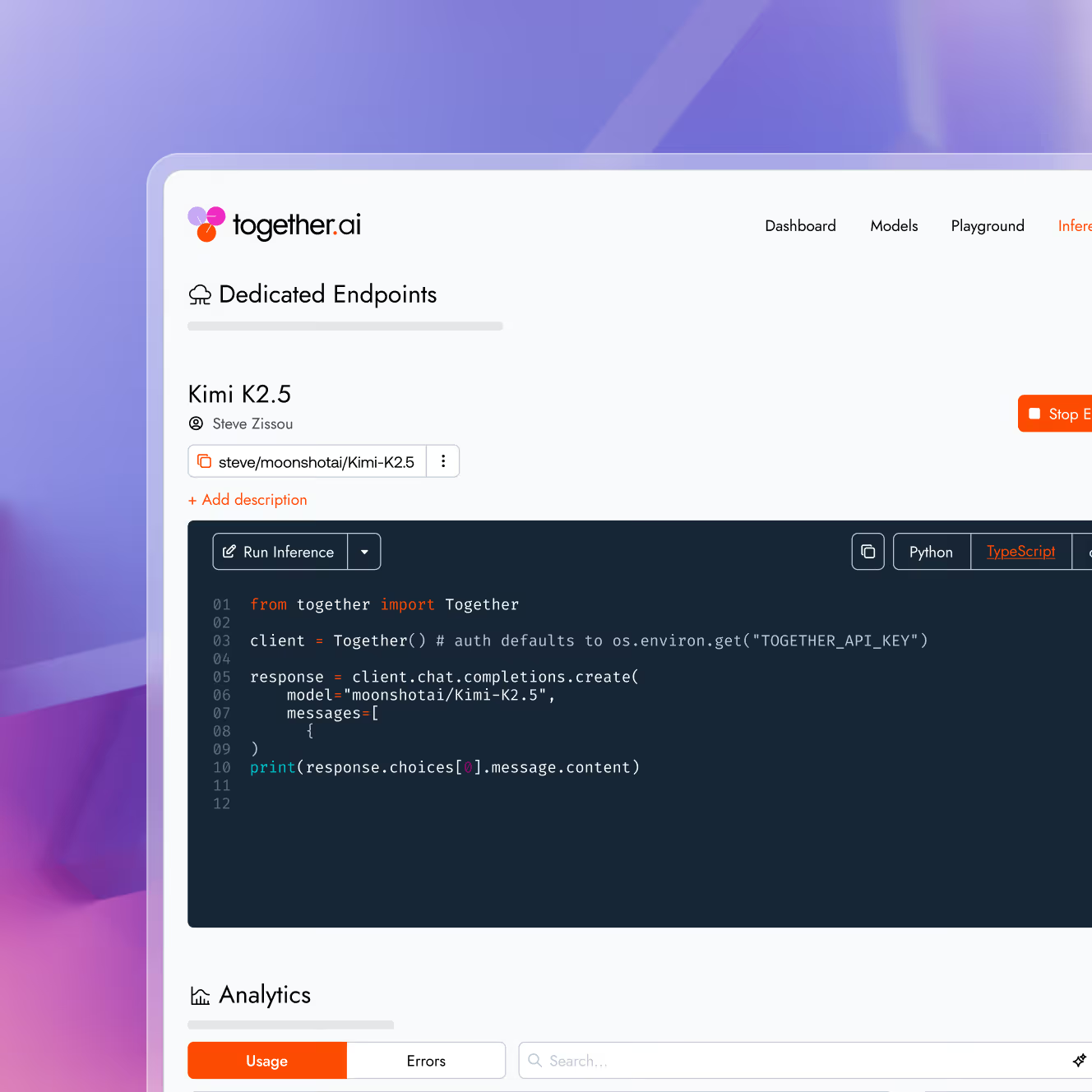

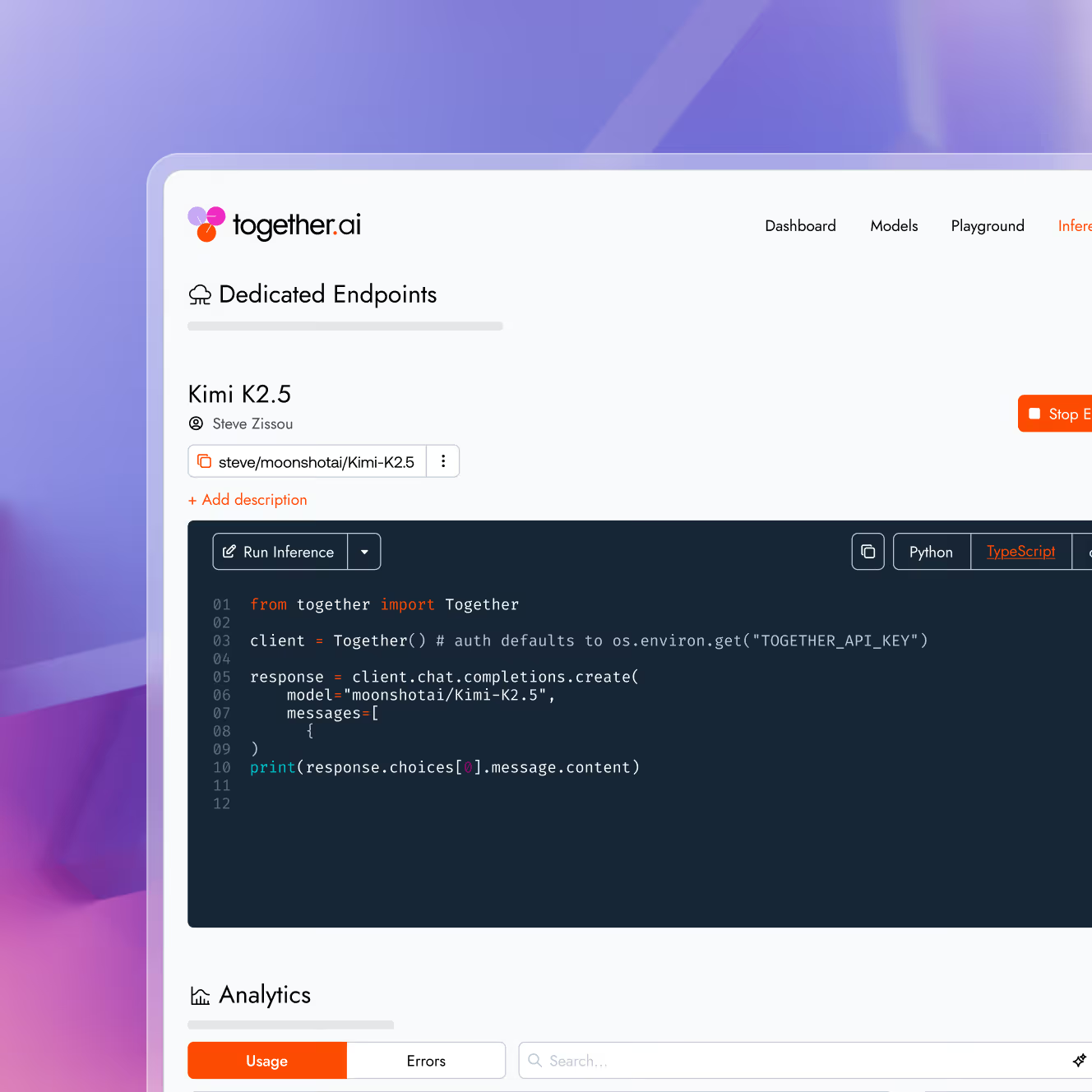

Before we can run the evaluations, we need to deploy our fine-tuned model as a Dedicated Endpoint. Access your model through the Together AI dashboard. Go to Models, select your fine-tuned model, and click Deploy. Choose from the available hardware options; we'll use a single A100-80GB GPU for this example.

We can now loop over models and obtain evaluation metrics:
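The shape of such a loop can be sketched locally with stub models standing in for deployed endpoints. Everything here is an assumption for illustration: `evaluate_model`, the stub functions, and the toy example are hypothetical, each `generate` callable would in practice wrap a call to a deployed model, and using the gold answers as conversation history is one possible design choice.

```python
from statistics import mean


def evaluate_model(generate, examples, score):
    """Average `score` over every assistant turn in chat-format `examples`.

    `generate` takes the conversation so far (a list of messages) and
    returns the model's answer as a string.
    """
    scores = []
    for example in examples:
        messages = example["messages"]
        for i, message in enumerate(messages):
            if message["role"] != "assistant":
                continue
            # The model sees all earlier turns, with gold answers as history.
            prediction = generate(messages[:i])
            scores.append(score(prediction, message["content"]))
    return mean(scores)


def exact(prediction, reference):
    """Simple case-insensitive exact match used as the score function."""
    return float(prediction.strip().lower() == reference.strip().lower())


# Stub "models" that ignore their input; real ones would query an endpoint.
def stub_a(history):
    return "red"


def stub_b(history):
    return "unknown"


examples = [{
    "messages": [
        {"role": "system", "content": "Story: Anna has a red kite."},
        {"role": "user", "content": "What does Anna have?"},
        {"role": "assistant", "content": "a kite"},
        {"role": "user", "content": "What color is it?"},
        {"role": "assistant", "content": "red"},
    ]
}]

models = {"stub_a": stub_a, "stub_b": stub_b}
results = {name: evaluate_model(fn, examples, exact) for name, fn in models.items()}
```

Feeding gold answers as history isolates per-turn quality. Feeding the model's own previous answers instead would measure how errors compound across turns.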

In the evaluation above, we saw a marked improvement in Llama 3.1’s ability to answer conversational questions: the exact match score increased ~12x, and the F1 score went up ~3x after fine-tuning.

Conclusion

Fine-tuning LLMs for multi-turn conversations requires careful attention to dataset preparation, training implementation, and evaluation. By following these best practices, you can create effective conversational models while managing computational resources efficiently.

For optimal results:

- Start with high-quality conversation data

- Implement proper input masking

- Use parameter-efficient fine-tuning methods

- Monitor and evaluate throughout the process

The Together Fine-Tuning API lets you handle all the steps of fine-tuning. Get started now by checking the docs here.

