Together GPU Clusters

Reliable self-serve, AI-ready GPU clusters at scale

Go from zero to production in minutes. Bare-metal performance, InfiniBand networking, and managed orchestration — with flexible pricing for both on-demand and reserved capacity.

Why Together GPU Clusters

Infrastructure that keeps long-running jobs on track — with automated recovery, elastic scale, and zero DevOps overhead.

90% faster training on Blackwell

NVIDIA HGX B200 with Together Kernel Collection delivers 90% faster BF16 training than optimized Hopper.

Automatic recovery

When hardware fails, automated remediation restores capacity — no support tickets, no manual intervention, keeping long-running jobs on track with predictable recovery behavior.

Scale from 8 to 4,000+ GPUs

Managed orchestration and elasticity — start small for experimentation and programmatically scale your AI applications, GPUs and storage as you move to production.

Everything you need to train at scale

Managed infrastructure with built-in observability, orchestration flexibility, and research-grade performance.

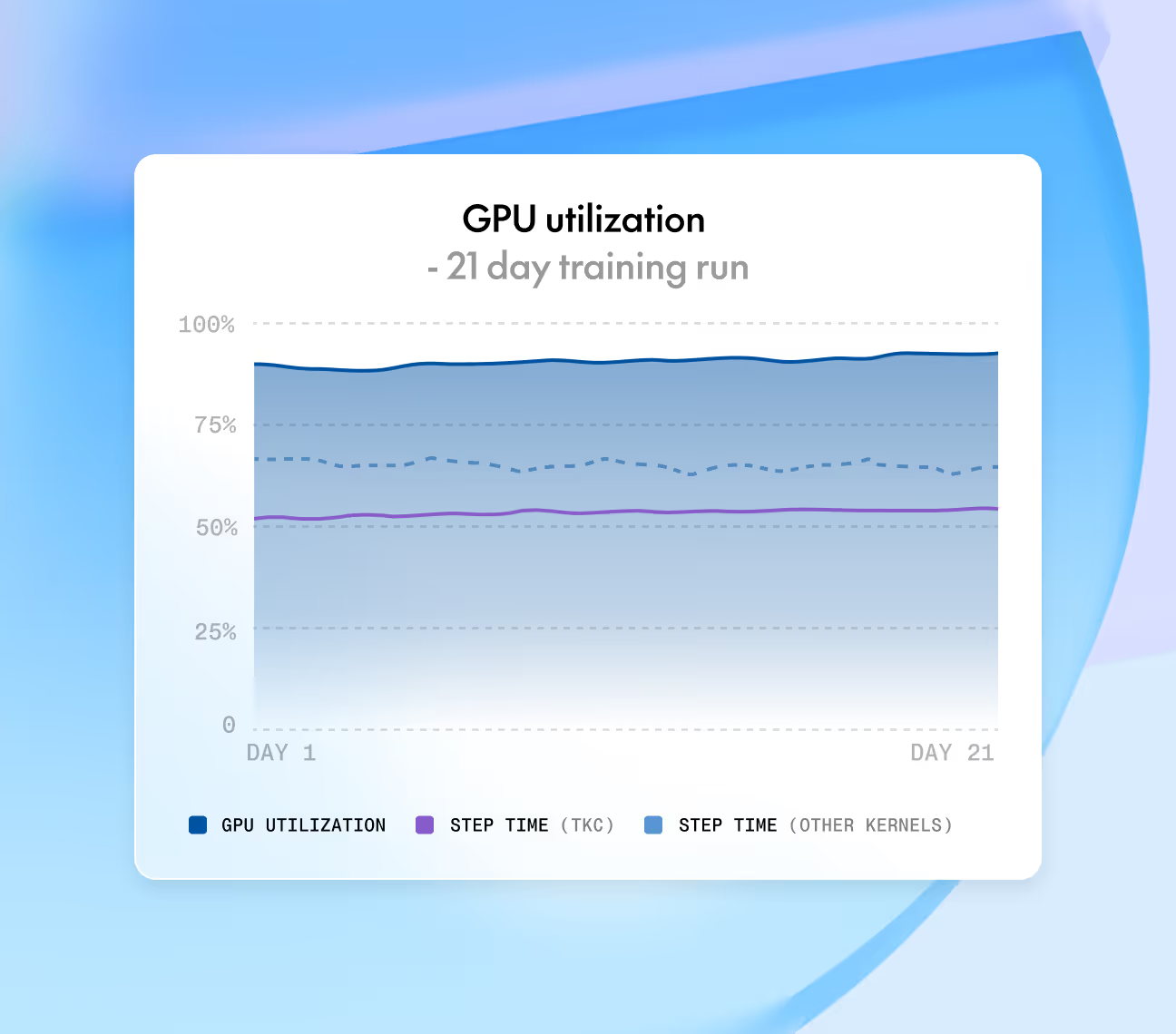

Maintain high utilization across multi-week training runs and model serving. Kernel, hardware, and storage acceleration reduce stragglers and keep latencies predictable.

Scale compute and storage seamlessly from experiments to production over high-speed InfiniBand. Keep capacity online automatically using continuous health checks, automated remediation, and self-serve node repair.

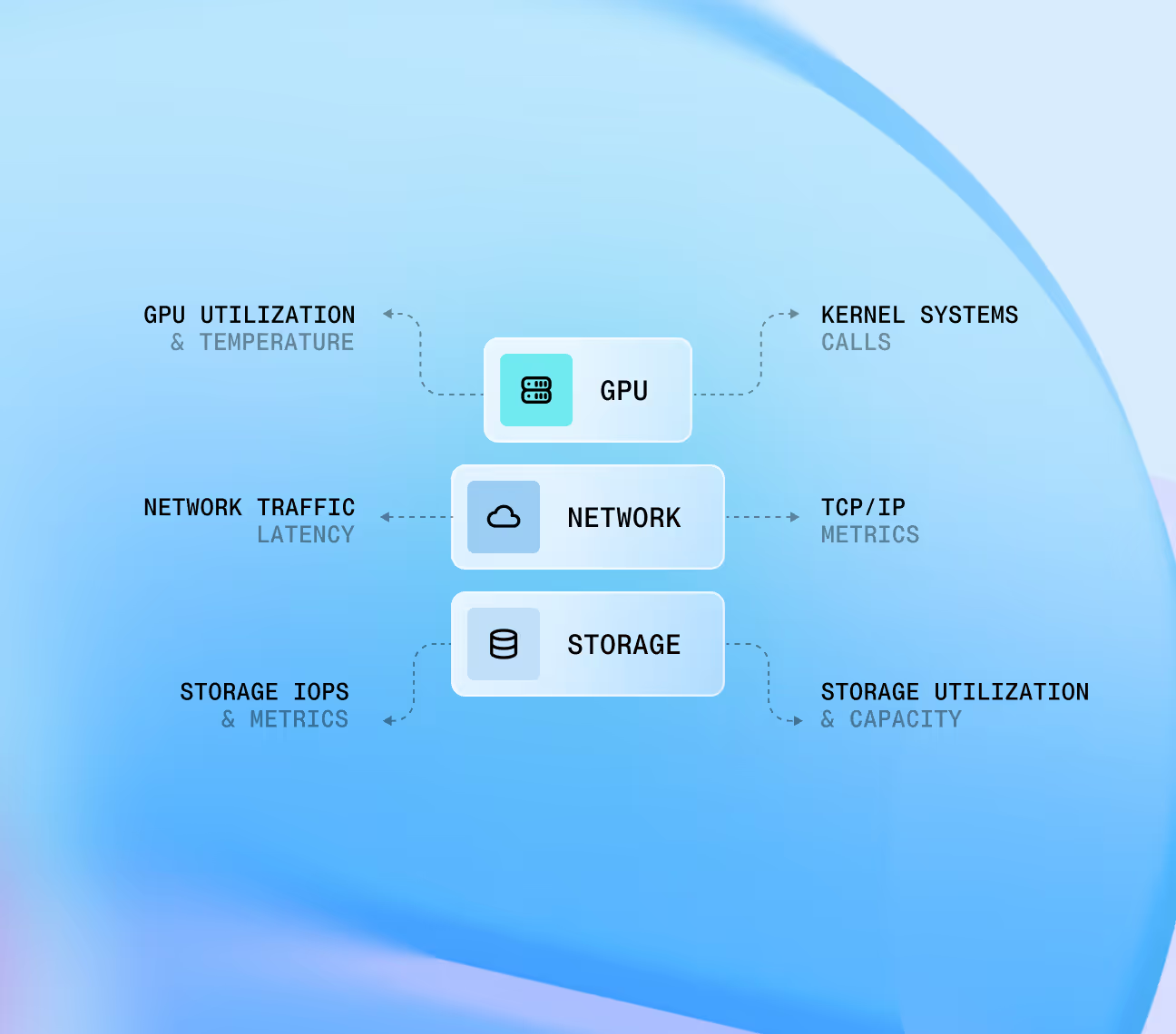

Monitor workloads instantly using pre-built Grafana dashboards and full-stack metrics across GPUs, storage, networking, and Kubernetes. Gain complete system visibility without writing custom instrumentation.

Provision clusters instantly with pre-configured tooling alongside selectable drivers and CUDA versions. Manage cross-team access via project-level RBAC using the CLI, SDK, API, Terraform, or the web console.

Frontier research-powered training performance

The Together Kernel Collection, built by our Chief Scientist Tri Dao (creator of FlashAttention), delivers improved training and inference performance.

AI Training Performance: NVIDIA Hopper to Blackwell, with TKC

TKC vs SOTA Approaches

90% faster training

Training a 70B parameter Llama-architecture model (BF16) with an optimized TorchTitan + Together Kernel Collection (TKC) reached 15,264 tokens/second/GPU on NVIDIA HGX B200, up from 8,080 tokens/second on NVIDIA HGX H100—a 90% jump in training speed.

learn moreFP8 GEMM Performance (M x N x K)

- ThunderKittens B200

- cuBLAS H100

- cuBLASB200

ThunderKittens vs cuBLAS

~2× faster

ThunderKittens’ FP8 kernel for NVIDIA HGX B200 matches NVIDIA cuBLAS GEMM performance while delivering ~2× speedup over H100 FP8 GEMMs, leveraging Blackwell’s Tensor Core–accelerated matrix operations.

learn more

Fully managed, high-performance shared filesystems for faster training and innovation cycle

Provision and attach shared storage volumes for your GPU clusters to store and persist your training data, model weights — ensure your GPUs do not starve for data.

Weka excels at high IOPS workloads

Weka excels at high IOPS workloadsWith strong metadata performance. It scored 826.86 on IO500 and delivers sub 200 microsecond latency. Heavy small file operations and metadata intensive tasks like checkpoint discovery across hundreds of training ranks.

VAST simplifies operations

VAST simplifies operationsVAST's disaggregated architecture separates compute from storage for straightforward capacity expansion and a unified namespace. Built for enterprise environments where operational simplicity and broad feature coverage matter most.

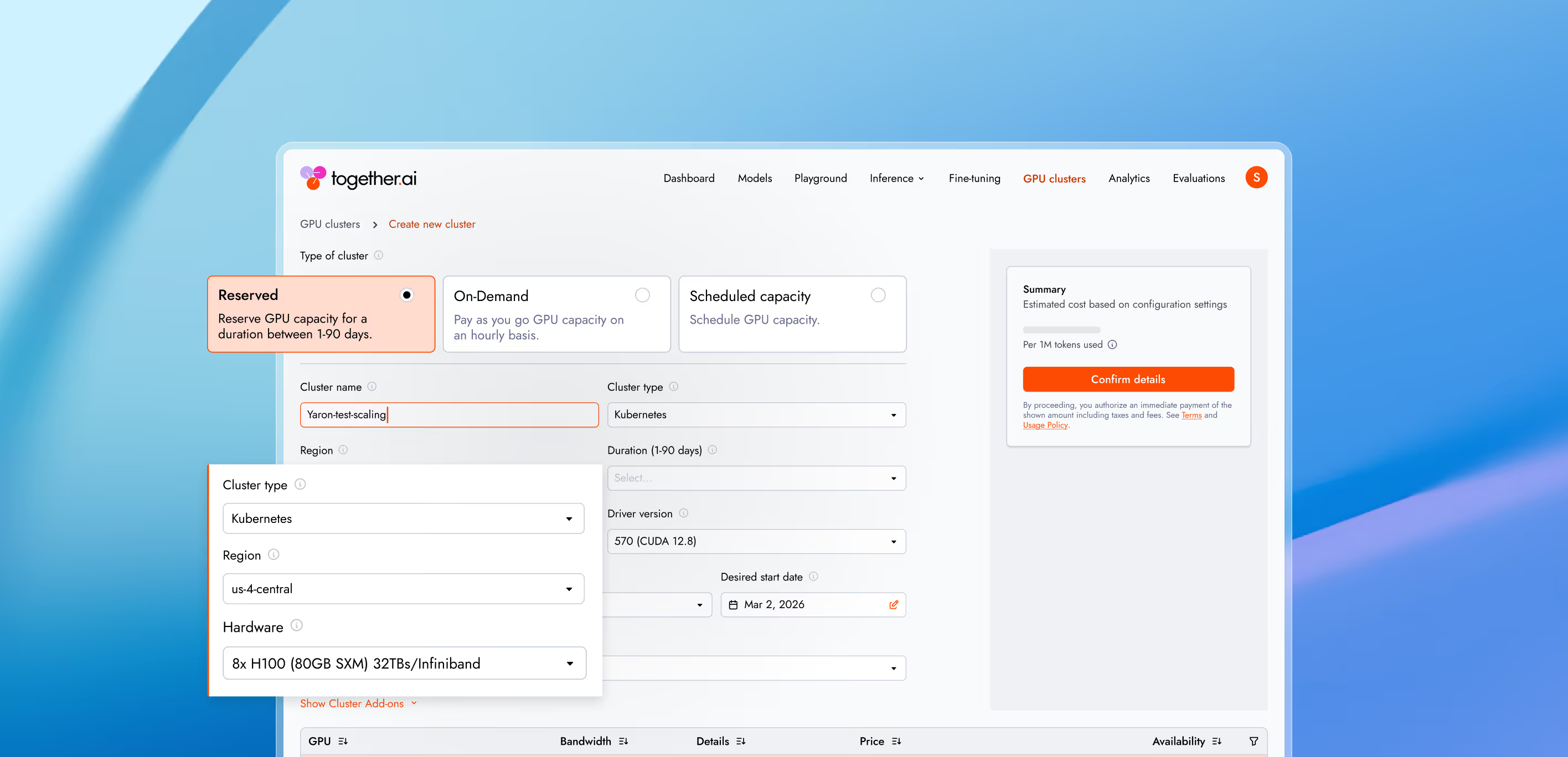

Flexible pricing models

Both options are fully self-serve. Choose based on your capacity requirements and commitment level.

- On-DemandStandard hourly rateCommitmentNone—pay hourly, terminate anytimeBest forStarting with on-demand for flexibilityCapacityBased on real-time availabilityScaleUp to 256 GPUsCreate now

- ReservedLower hourly rateCommitmentUp to 6 months, pay upfrontBest forGuaranteed access with better economicsCapacityLocked in for your durationScaleUp to 4,000+ GPUsReserve capacity

Choose your cluster configuration

Self-serve GPUs with transparent per-GPU billing.

Orchestration flexibility for your AI workloads

Self-serve GPUs with hourly pricing per GPU.

- Managed KubernetesFor training and inferenceKubeadm-based upstream-compliant K8sNode autoscaling for elastic computeManaged Grafana for observabilityFlexible ingress configuration for inferenceHA control plane with managed upgradesCert Manager for HTTPS endpointsGet started

- Slurm on KubernetesFor training workloadsPrecise hardware control and gang schedulingSubmit jobs via srun, sbatchDirect SSH access with Slurm simplicity and K8s-backed resilienceEssential for distributed training synchronizationGet started

Regions and availability zones

Launch close to your users and data across 25+ cities.

- USA2GW+ in the portfolio with 600MW of near-term capacity in US.

- Europe150 MW+ available in Europe: UK, Spain, France, Portugal, and Iceland also.

- Asia & Middle EastOptions available based on the scale of the projects in Asia and the Middle East.

Choose from global regions to meet data residency and compliance requirements—HIPAA for healthcare, GDPR for Europe, or banking regulations.

Infrastructure you can trust at scale.

Production-grade security.

We take security and compliance seriously, with strict data privacy controls to keep your information protected. Your data and models remain fully under your ownership, safeguarded by robust security measures.

NVIDIA preferred partner

NVIDIA preferred partner- AICPA SOC 2 Type II